The growth of the global population, which is projected to reach nearly 10 billion by 2050, is placing significant pressure on the agricultural sector to increase crop production and maximize yields. To address looming food shortages, two approaches have emerged: expanding land use and adopting large-scale farming, or embracing innovative practices and technological advances to raise productivity on existing farmland.

Pushed by many obstacles to farm productivity, including limited land holdings, labor shortages, climate change, environmental issues, and declining soil fertility, the modern agricultural landscape is evolving in several innovative directions. Farming has come a long way since hand plows and horse-drawn machinery, and each season brings new technologies designed to improve efficiency and make the most of the harvest. However, both individual farmers and global agribusinesses often miss the opportunities that artificial intelligence can bring to their farming methods.

At Intellias, we’ve worked with the agricultural sector for over 20 years, successfully implementing real-life technological solutions. Our focus has been on developing innovative systems for quality control, traceability, compliance practices, and more. Now, we will dive deeper into how new technologies can help your farming business move forward.

Benefits of AI in agriculture

Until recently, putting the words AI and agriculture in the same sentence might have seemed strange. After all, agriculture has been the backbone of human civilization for millennia, providing sustenance and driving economic development, while even the most primitive AI emerged only several decades ago. Nevertheless, innovative ideas are reaching every industry, and agriculture is no exception. In recent years, rapid advances in agricultural technology have been transforming farming practices. These innovations are becoming increasingly essential as climate change, population growth, and resource scarcity threaten the sustainability of our food system. AI can help address many of these challenges and offset several limitations of traditional farming.

Data-based decisions

The modern world runs on data. Organizations in the agricultural sector use data to gain detailed insights into every part of the farming process, from understanding each acre of a field to monitoring the entire produce supply chain to understanding what drives yields. AI-powered predictive analytics is already making its way into agribusinesses. With AI, farmers can gather and process more data in less time. AI can also analyze market demand, forecast prices, and determine optimal times for sowing and harvesting.

Artificial intelligence in agriculture can help assess soil health, monitor weather conditions, and recommend when and how much fertilizer and pesticide to apply. Farm management software that builds on these insights can boost both production and profitability by helping farmers make better decisions at every stage of crop cultivation.

Cost savings

Improving farm yields is a constant goal for farmers. Combined with AI, precision agriculture can help farmers grow more crops with fewer resources. AI in farming combines the best soil management practices, variable rate technology, and effective data management to maximize yields while minimizing spending.

AI gives farmers real-time crop insights, helping them identify which areas need irrigation, fertilization, or pesticide treatment. Innovative practices such as vertical farming can also increase food production while minimizing resource usage. The result is reduced herbicide use, better harvest quality, higher profits, and significant cost savings.

Automation impact

Agricultural work is hard, so labor shortages are nothing new. Automation helps address them without the need to hire more people. Mechanization turned agricultural tasks that once demanded superhuman effort and draft animal labor into jobs that take just a few hours, and a new wave of digital automation is now transforming the sector once more.

Examples include automated farm machinery such as driverless tractors, smart irrigation and fertilization systems, IoT-powered agricultural drones, smart spraying, vertical farming software, and AI-based greenhouse robots for harvesting. For repetitive, well-defined tasks, AI-driven tools can work faster and more consistently than human farm workers.

Applications of artificial intelligence in agriculture

The AI in agriculture market is expected to grow from USD 1.7 billion in 2023 to USD 4.7 billion by 2028, according to MarketsandMarkets.

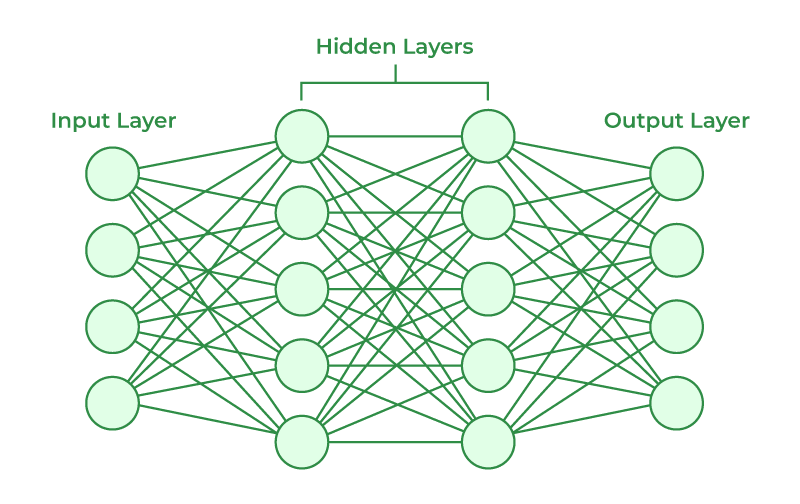

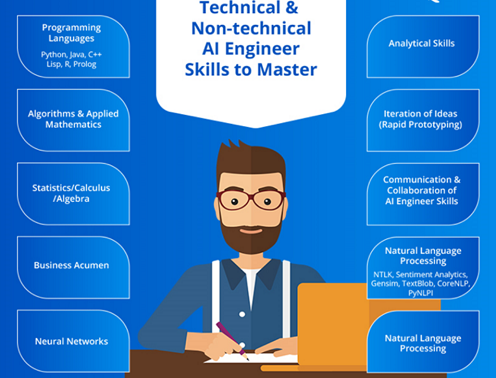

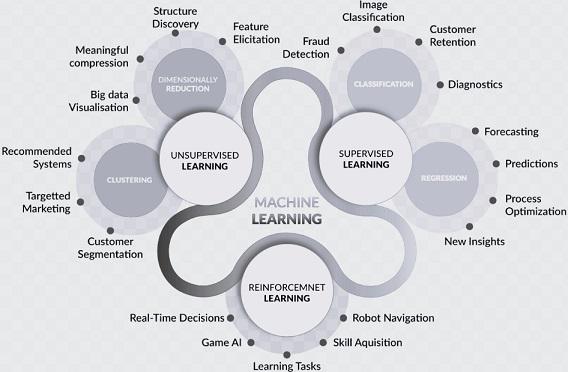

Traditional farming involves many manual processes, and AI models can bring clear advantages here. By complementing technologies that farms have already adopted, an intelligent agriculture system can simplify many tasks: AI can collect and process large volumes of data, then determine and trigger the best course of action. Here are some common use cases for AI in agriculture:

Optimizing automated irrigation systems

AI algorithms enable autonomous crop management. When combined with IoT (Internet of Things) sensors that monitor soil moisture and weather conditions, algorithms can decide in real time how much water to give crops. An autonomous irrigation system is designed to conserve water while supporting sustainable farming practices. In smart greenhouses, AI optimizes plant growth by automatically adjusting temperature, humidity, and light levels based on real-time data.
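As a rough illustration of the irrigation decision described above, the sketch below uses a simple water-balance rule in place of a trained AI model. Everything in it is an assumption for illustration: the `ZoneReading` type, the 30% moisture target, the conversion of 1.5 mm of water per percentage point of deficit, and the 80% rain-efficiency factor are hypothetical values, not agronomic recommendations.

```python
from dataclasses import dataclass


@dataclass
class ZoneReading:
    """Hypothetical per-zone snapshot from IoT soil sensors and a weather feed."""
    zone_id: str
    soil_moisture_pct: float  # volumetric soil moisture, in percent
    forecast_rain_mm: float   # rain expected over the next 24 hours


def irrigation_mm(reading: ZoneReading,
                  target_pct: float = 30.0,      # assumed moisture target
                  mm_per_pct: float = 1.5,       # assumed mm of water per % of deficit
                  rain_efficiency: float = 0.8,  # assumed share of rain reaching the roots
                  ) -> float:
    """Return how many millimetres of water to apply to one zone."""
    # How far below the moisture target the zone currently is.
    deficit_pct = max(0.0, target_pct - reading.soil_moisture_pct)
    needed_mm = deficit_pct * mm_per_pct
    # Credit the forecast rain so the system does not irrigate ahead of a storm.
    expected_rain_mm = reading.forecast_rain_mm * rain_efficiency
    return round(max(0.0, needed_mm - expected_rain_mm), 1)


if __name__ == "__main__":
    readings = [
        ZoneReading("north", soil_moisture_pct=20.0, forecast_rain_mm=0.0),
        ZoneReading("south", soil_moisture_pct=25.0, forecast_rain_mm=5.0),
        ZoneReading("east", soil_moisture_pct=35.0, forecast_rain_mm=0.0),
    ]
    for r in readings:
        print(r.zone_id, irrigation_mm(r))
```

In a real system, a model trained on the farm's own sensor and yield history would typically replace these fixed parameters; the rule only shows where sensor readings and weather forecasts enter the decision.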

Detecting leaks or damage to irrigation systems

AI plays a crucial role in detecting leaks in irrigation systems. By analyzing sensor data, algorithms can identify patterns and anomalies that indicate potential leaks. Machine learning (ML) models can be trained to recognize the specific signatures of leaks, such as changes in water flow or pressure. Real-time monitoring and analysis enable early detection, preventing both water waste and potential crop damage.

AI can also combine weather data with crop water requirements to identify areas with excessive water usage. By automating leak detection and providing alerts, AI improves water efficiency and helps farmers conserve resources.
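A minimal sketch of the anomaly-detection idea, assuming flow readings in litres per minute arrive at regular intervals from a single meter. It uses a rolling z-score rather than a trained ML model, and the window size, threshold, and `min_sigma` floor are illustrative assumptions; `flag_flow_anomalies` is a hypothetical helper, not part of any product.

```python
from statistics import mean, stdev


def flag_flow_anomalies(flow_lpm: list[float],
                        window: int = 6,
                        z_threshold: float = 3.0,
                        min_sigma: float = 0.5) -> list[int]:
    """Return indices of readings that deviate sharply from the preceding window."""
    anomalies = []
    for i in range(window, len(flow_lpm)):
        history = flow_lpm[i - window:i]
        mu = mean(history)
        # Floor the spread so ordinary sensor jitter on a very steady line
        # does not produce enormous z-scores.
        sigma = max(stdev(history), min_sigma)
        z = (flow_lpm[i] - mu) / sigma
        if abs(z) > z_threshold:
            anomalies.append(i)
    return anomalies


if __name__ == "__main__":
    # Made-up readings: a steady line with one sudden jump in flow.
    flows = [40.1, 39.8, 40.3, 40.0, 39.9, 40.2, 40.1, 55.0, 40.0]
    print(flag_flow_anomalies(flows))
```

A sudden jump in flow at an otherwise steady valve is the kind of signature the text describes; the same check could run on pressure readings, where a leak typically shows up as a drop.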

Crop and soil monitoring

The wrong combination of nutrients in soil can seriously affect the health and growth of crops. Identifying these nutrients and determining their effects on crop yield with AI allows farmers to easily make the necessary adjustments.

While human observation is limited in its accuracy, computer vision models can monitor soil conditions and gather the accurate data needed to combat crop diseases. This plant science data is then used to assess crop health, predict yields, and flag any particular issues. Sensors that track plants' growth conditions feed data into AI systems, which can trigger automated adjustments to the environment.

In practice, AI in agriculture has been able to track the stages of wheat growth and the ripeness of tomatoes with a speed and accuracy that manual inspection struggles to match.

Detecting disease and pests

Besides assessing soil quality and crop growth, computer vision can detect pests and diseases. AI models scan images of crops to find mold, rot, insects, or other threats to crop health. Combined with alert systems, this helps farmers act quickly to exterminate pests or isolate crops and prevent disease from spreading.

AI has been used to detect apple black rot with an accuracy of over 90%, and to identify insects such as flies, bees, and moths with similar accuracy. However, researchers first had to collect enough images of these insects to build a training dataset large enough to train the algorithm.

Monitoring livestock health

It may seem easier to detect health problems in livestock than in crops, but in fact it's particularly challenging. AI can help here too. For example, a company called CattleEye has developed a solution that uses drones, cameras, and computer vision to monitor cattle health remotely. It detects atypical cattle behavior and identifies activities such as birthing.

CattleEye uses AI and ML to determine how diet and environmental conditions affect livestock and to provide valuable insights. This knowledge can help farmers improve the well-being of their cattle and increase milk production.

Intelligent pesticide application

By now, farmers are well aware that pesticide application is ripe for optimization. Unfortunately, both manual and automated application have notable limitations. Applying pesticides manually allows more precise targeting of specific areas, but it can be slow and difficult work. Automated spraying is quicker and less labor-intensive, but it often lacks accuracy, which leads to environmental contamination.

AI-powered drones combine the advantages of both approaches while avoiding their drawbacks. They use computer vision to determine how much pesticide to spray on each area. Although the technology is still in its infancy, it is quickly becoming more precise.
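To make per-area dosing concrete, here is a minimal variable-rate sketch. It assumes the drone's vision model has already divided the field into grid cells and estimated the infested fraction of each cell; the linear dose rule, the 2 L/ha maximum rate, and the 5% action threshold are illustrative assumptions, not label rates for any real product.

```python
def spray_plan(infestation: dict[str, float],
               max_rate_l_per_ha: float = 2.0,
               threshold: float = 0.05) -> dict[str, float]:
    """Map each grid cell to a spray rate in litres per hectare.

    `infestation` maps a hypothetical cell ID to the fraction of that cell
    (0 to 1) that a vision model flagged as affected.
    """
    plan = {}
    for cell, fraction in infestation.items():
        if fraction < threshold:
            # Below the action threshold: leave the cell unsprayed.
            plan[cell] = 0.0
        else:
            # Scale the dose with the affected area, capped at the maximum rate.
            plan[cell] = round(min(fraction, 1.0) * max_rate_l_per_ha, 2)
    return plan


if __name__ == "__main__":
    print(spray_plan({"A1": 0.0, "A2": 0.02, "B1": 0.25, "B2": 0.9}))
```

Clean cells get no pesticide at all, which is where the savings over uniform automated spraying come from.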

Yield mapping and predictive analytics

Yield mapping uses ML algorithms to analyze large datasets in real time, helping farmers understand the patterns and characteristics of their crops and plan accordingly. By combining techniques such as 3D mapping with data from sensors and drones, farmers can predict yields for specific crops. Data collected over multiple drone flights lets the algorithms produce increasingly precise analyses.

These methods permit the accurate prediction of future yields for specific crops, helping farmers know where and when to sow seeds as well as how to allocate resources for the best return on investment.
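As a simplified illustration of yield prediction, the sketch below fits an ordinary least-squares line between a per-plot vegetation index (NDVI) measured from drone imagery and the yield later harvested from that plot. The choice of NDVI as the single input and all the numbers are assumptions made for illustration; practical yield models usually combine many more inputs, such as soil, weather, and 3D canopy data.

```python
def fit_line(x: list[float], y: list[float]) -> tuple[float, float]:
    """Ordinary least-squares fit of y = slope * x + intercept."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    if sxx == 0:
        raise ValueError("need at least two distinct x values")
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


def predict(slope: float, intercept: float, x: float) -> float:
    """Evaluate the fitted line at x."""
    return slope * x + intercept


if __name__ == "__main__":
    # Made-up history: mean NDVI per plot vs. harvested yield in t/ha.
    ndvi = [0.42, 0.55, 0.61, 0.68, 0.74]
    yield_t_ha = [4.1, 5.3, 5.8, 6.6, 7.1]
    slope, intercept = fit_line(ndvi, yield_t_ha)
    # Forecast a plot whose latest drone survey shows a mean NDVI of 0.65.
    print(round(predict(slope, intercept, 0.65), 2))
```

Each additional drone flight adds data points to the fit, which is one simple way to picture how repeated surveys make the predictions more precise.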

Automatic weeding and harvesting

Just as computer vision can detect pests and diseases, it can also detect weeds and invasive plant species. Combined with machine learning, computer vision analyzes the size, shape, and color of leaves to distinguish weeds from crops. Such solutions can guide robots that perform tasks like automatic weeding, and robots of this kind have already been used effectively. As these technologies become more accessible, both weeding and harvesting could be carried out entirely by smart robots.
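The sketch below shows the classification step in its simplest form: a nearest-centroid classifier over three leaf features (size, elongation as a proxy for shape, and greenness as a proxy for color). Production weed-detection systems usually learn features directly from images with deep neural networks; the feature definitions, their 0 to 1 scaling, and the sample values here are assumptions for illustration.

```python
from math import dist

# Hypothetical feature vector: (leaf_area, elongation, greenness), each scaled to 0..1.
Features = tuple[float, float, float]


def train_centroids(samples: dict[str, list[Features]]) -> dict[str, Features]:
    """Average the feature vectors of each class into one centroid."""
    return {
        label: tuple(sum(column) / len(rows) for column in zip(*rows))
        for label, rows in samples.items()
    }


def classify(centroids: dict[str, Features], features: Features) -> str:
    """Return the label whose centroid is closest in Euclidean distance."""
    return min(centroids, key=lambda label: dist(centroids[label], features))


if __name__ == "__main__":
    centroids = train_centroids({
        "crop": [(0.80, 0.30, 0.60), (0.75, 0.35, 0.65)],
        "weed": [(0.20, 0.80, 0.90), (0.25, 0.70, 0.85)],
    })
    print(classify(centroids, (0.70, 0.40, 0.60)))  # crop
    print(classify(centroids, (0.30, 0.75, 0.80)))  # weed
```

A weeding robot would act only on detections labelled "weed", leaving crop plants untouched.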

Sorting harvested produce

AI is not only useful for identifying potential issues with crops while they're growing; it also has a role to play after harvest. Sorting has traditionally been done manually, but AI can sort produce more accurately.

Computer vision can detect pests as well as disease in harvested crops. What’s more, it can grade produce based on its shape, size, and color. This enables farmers to quickly separate produce into categories — for example, to sell to different customers at different prices. In comparison, traditional manual sorting methods can be painstakingly labor-intensive.
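Once a vision model has measured each item, the grading step itself can be as simple as a set of thresholds. In this sketch, the measurements (diameter, a roundness score, and the share of the skin showing the target color) are assumed to come from an upstream computer vision model, and the grade names and cut-offs are hypothetical, not an industry standard.

```python
def grade(diameter_mm: float, roundness: float, color_coverage: float) -> str:
    """Assign a market grade from size, shape, and color measurements.

    `roundness` is 1.0 for a perfect circle; `color_coverage` is the share of
    the skin showing the target color. All thresholds are hypothetical.
    """
    if diameter_mm >= 75 and roundness >= 0.90 and color_coverage >= 0.75:
        return "premium"
    if diameter_mm >= 65 and roundness >= 0.80 and color_coverage >= 0.50:
        return "standard"
    return "processing"


def sort_batch(items: list[tuple[str, float, float, float]]) -> dict[str, list[str]]:
    """Group item IDs into grade bins, e.g. to sell to different customers."""
    bins: dict[str, list[str]] = {"premium": [], "standard": [], "processing": []}
    for item_id, diameter, roundness, coverage in items:
        bins[grade(diameter, roundness, coverage)].append(item_id)
    return bins


if __name__ == "__main__":
    batch = [("a1", 80, 0.95, 0.80), ("a2", 70, 0.85, 0.60), ("a3", 60, 0.95, 0.90)]
    print(sort_batch(batch))
```

Keeping the grading rules separate from the vision model makes it easy to adjust cut-offs for different buyers without retraining anything.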

Surveillance

Security is an important part of farm management. Farms are common targets for burglars, as it’s hard for farmers to monitor their fields around the clock. Animals are another threat — whether it’s foxes breaking into the chicken coop or a farmer’s own livestock damaging crops or equipment. When combined with video surveillance systems, computer vision and ML can quickly identify security breaches. Some systems are even advanced enough to distinguish employees from unauthorized visitors.

Role of AI in the agriculture information management cycle

Managing agricultural data with AI can be beneficial in many ways:

Risk management

Predictive analytics helps farmers anticipate risks and reduce errors in farming processes.

Plant breeding

AI uses plant growth data to help identify crop varieties that are more resilient to extreme weather, disease, or harmful pests.

Soil and crop health analysis

AI algorithms can analyze the chemical composition of soil samples to determine which nutrients may be lacking. AI can also identify or even predict crop diseases.

Crop feeding

AI helps identify optimal irrigation patterns and nutrient application times, and can predict the optimal mix of agronomic products.

Harvesting

AI is useful for enhancing crop yields and can even predict the best time to harvest crops.

Author: Alina Piddubna
