E-Learn Knowledge Base

What Is Analysis of Variance (ANOVA)?

Analysis of variance (ANOVA) is a statistical test used to assess the difference between the means of more than two groups. At its core, ANOVA allows you to compare arithmetic means across groups simultaneously. You can determine whether the differences observed are due to random chance or if they reflect genuine, meaningful differences.

A one-way ANOVA uses one independent variable. A two-way ANOVA uses two independent variables. Analysts use the ANOVA test to determine the influence of independent variables on the dependent variable in a regression study.

While this can sound arcane to those new to statistics, the applications of ANOVA are as diverse as they are profound. From medical researchers investigating the efficacy of new treatments to marketers analyzing consumer preferences, ANOVA has become an indispensable tool for understanding complex systems and making data-driven decisions.

KEY TAKEAWAYS

- ANOVA is a statistical method that simultaneously compares means across several groups to determine if observed differences are due to chance or reflect genuine distinctions.

- A one-way ANOVA uses one independent variable. A two-way ANOVA uses two independent variables.

- By partitioning total variance into components, ANOVA unravels relationships between variables and identifies true sources of variation.

- ANOVA can handle multiple factors and their interactions, providing a robust way to better understand intricate relationships.

[Image: Analysis of Variance] Xiaojie Liu / Investopedia

How ANOVA Works

An ANOVA test is typically applied to experimental data, where subjects are assigned to different test groups. Because the calculations are straightforward, ANOVA can also be computed by hand when statistical software is not available. It is simple to use and works well with small samples, as long as the design lets you measure variation both between groups and within groups.

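ANOVA is similar to running several two-sample t-tests, but it produces fewer Type I errors (false positives). Running many pairwise t-tests raises the chance that at least one comparison looks significant by chance alone, whereas ANOVA tests all the groups in a single procedure. ANOVA works by comparing the group means and partitioning the total variance into distinct sources.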
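
To see why several pairwise t-tests inflate Type I errors, here is a minimal sketch that computes the familywise error rate: the probability of at least one false positive across all pairwise comparisons at a 5% significance level. It assumes the pairwise tests are independent, which they are not when they share groups, so treat the numbers as an approximation. The function name `familywise_error_rate` is hypothetical.

```python
from math import comb


def familywise_error_rate(num_groups: int, alpha: float = 0.05) -> float:
    """Approximate chance of at least one Type I error across all pairwise t-tests.

    Assumes every pairwise test is independent, which is only an approximation.
    """
    num_tests = comb(num_groups, 2)  # number of distinct pairs of groups
    return 1 - (1 - alpha) ** num_tests


for k in (3, 4, 5):
    print(f"{k} groups: {comb(k, 2)} t-tests, familywise error ~ {familywise_error_rate(k):.3f}")
```

For three groups this works out to about 0.14, nearly three times the nominal 5% rate, and the gap widens as groups are added. A single ANOVA at the 5% level avoids this build-up.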

Analysts use a one-way ANOVA when they have collected data on one independent variable and one dependent variable; a two-way ANOVA adds a second independent variable. The independent variable typically has at least three different groups or categories (levels). ANOVA determines whether the dependent variable changes according to the level of the independent variable.

Researchers might test students from several colleges to see whether students from one of them consistently outperform those from the other schools. In a business application, a research and development (R&D) team might test two ways of creating a product to see whether one is more cost-efficient than the other.

ANOVA's versatility and ability to handle multiple variables make it a valuable tool for researchers and analysts across various fields. By comparing means and partitioning variance, ANOVA provides a robust way to understand the relationships between variables and identify significant differences among groups.

ANOVA Formula

$$F = \frac{MST}{MSE}$$

where:

F = ANOVA coefficient (the F statistic, or F-ratio)
MST = Mean sum of squares due to treatment
MSE = Mean sum of squares due to error
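
The two mean squares can be written out for a one-way design using the standard textbook definitions. The notation here is introduced for illustration and does not come from the article: k groups, N observations in total, group j has n_j observations x_ij with mean x̄_j, and x̄ is the grand mean. If the assumptions described later hold (normality, equal variances, independence), F follows an F-distribution with k - 1 and N - k degrees of freedom when there is no real difference between groups.

```latex
MST = \frac{SS_{\text{treatment}}}{k - 1},
\qquad
SS_{\text{treatment}} = \sum_{j=1}^{k} n_j \left(\bar{x}_j - \bar{x}\right)^2

MSE = \frac{SS_{\text{error}}}{N - k},
\qquad
SS_{\text{error}} = \sum_{j=1}^{k} \sum_{i=1}^{n_j} \left(x_{ij} - \bar{x}_j\right)^2

F = \frac{MST}{MSE} \sim F_{k-1,\,N-k} \quad \text{under the null hypothesis}
```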

History of ANOVA

The t- and z-test methods, developed in the 20th century, were used for statistical analysis. In 1918, Ronald Fisher created the analysis of variance method.[1]

For this reason, ANOVA is also called the Fisher analysis of variance, and it is an extension of the t- and z-tests. The term became well known in 1925 after appearing in Fisher's book, "Statistical Methods for Research Workers." It was first applied to agricultural experiments and later expanded to other subjects, including experimental psychology.

The ANOVA test is often the first step in analyzing the factors that affect a given data set. Once the test is finished, an analyst performs further testing on the factors that might measurably contribute to the data's variability. The analyst then uses the ANOVA results in an F-test to assess whether the proposed regression models explain a significant share of that variability.

If you need reminders on these terms, here's a cheat sheet for many of the major statistical tests found in finance studies:

Cheat Sheet on Common Statistical Tests in Finance and Investing

| Test | Purpose | When to Use | Applications in Finance/Investing |
|---|---|---|---|
| ANCOVA | Compares the arithmetic means of two or more groups while controlling for the effects of a continuous variable | • Normal distribution • Comparing multiple independent variables with a covariate | • Analyzing investment returns while controlling for market volatility • Evaluating the effectiveness of financial strategies while accounting for economic conditions |
| ANOVA | Compares the means of three or more groups | • Data is normally distributed | • Comparing financial performance across different sectors or investment strategies |
| Chi-Square Test | Tests for association between two categorical variables (can't be measured on a numerical scale) | • Data is categorical (e.g., investment choices, market segments) | • Analyzing customer demographics and portfolio allocations |
| Correlation | Measures the strength and direction of a linear relationship between two variables | • Data is continuous | • Assessing risk and return of assets, portfolio diversification |
| Durbin-Watson Test | Checks if errors in a prediction model are related over time | • Time series data | • Detecting serial correlation in stock prices, market trends |
| F-Test | Compares the variances of two or more groups | • Data is normally distributed | • Testing the equality of variances in stock returns and portfolio performance |
| Granger Causality Test | Tests whether one time series helps predict another (predictive rather than true causality) | • Time series data | • Determining if one economic indicator predicts another |
| Jarque-Bera Test | Tests for normality of data | • Continuous data | • Assessing if financial data follows a normal distribution |
| Mann-Whitney U Test | Compares medians of two independent samples | • Data is not normally distributed | • Comparing the financial performance of two groups with non-normal distributions |
| MANOVA | Compares means of two or more groups on multiple dependent variables simultaneously | • Data is normally distributed • Analyzing multiple related outcome variables | • Assessing the impact of different investment portfolios on multiple financial metrics • Evaluating the overall financial health of companies based on various performance indicators |
| One-Sample T-Test | Compares a sample mean to a known population mean | • Data is normally distributed, or the sample size is large | • Comparing actual versus expected returns |
| Paired T-Test | Compares means of two related samples (e.g., before and after measurements) | • Data is normally distributed, or the sample size is large | • Evaluating if a financial change has been effective |
| Regression | Predicts the value of one variable based on the value of another variable | • Data is continuous | • Modeling stock prices • Predicting future returns |
| Sign Test | Tests for differences in medians between two related samples | • Data is not normally distributed | • Non-parametric alternative to the paired t-test in financial studies |
| T-Test | Compares the means of two groups | • Data is normally distributed, or the sample size is large | • Comparing the performance of two investment strategies |
| Wilcoxon Rank-Sum Test | Compares the medians of two independent samples | • Data is not normally distributed | • Non-parametric alternative to the independent t-test in finance |
| Z-Test | Compares a sample mean to a known population mean | • Data is normally distributed, and the population standard deviation is known | • Testing hypotheses about market averages |

What ANOVA Can Tell You

ANOVA splits the observed aggregate variability within a data set into two parts: systematic factors and random factors. The systematic factors have a statistical influence on the given data set, while the random factors do not; they reflect chance variation.

The ANOVA test lets you compare more than two groups simultaneously to determine whether their means differ. The result of the ANOVA formula, the F statistic or F-ratio, compares the variability between samples with the variability within samples.
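
The split into between-group and within-group variability can be checked numerically. This minimal sketch uses three small groups of made-up values, not data from the article, and confirms that the total sum of squares equals the between-group sum of squares (the systematic part) plus the within-group sum of squares (the random part). The function name `partition_variance` is hypothetical.

```python
from statistics import fmean


def partition_variance(groups: list[list[float]]) -> tuple[float, float, float]:
    """Return (total SS, between-group SS, within-group SS) for a one-way layout."""
    all_values = [x for g in groups for x in g]
    grand_mean = fmean(all_values)
    # Total variability: each observation's squared distance from the grand mean.
    ss_total = sum((x - grand_mean) ** 2 for x in all_values)
    # Systematic part: distance of each group mean from the grand mean, weighted by group size.
    ss_between = sum(len(g) * (fmean(g) - grand_mean) ** 2 for g in groups)
    # Random part: scatter of observations around their own group mean.
    ss_within = sum((x - fmean(g)) ** 2 for g in groups for x in g)
    return ss_total, ss_between, ss_within


groups = [[4.0, 5.0, 6.0], [6.0, 7.0, 8.0], [9.0, 10.0, 11.0]]
total, between, within = partition_variance(groups)
print(total, between, within)  # total equals between + within, up to floating-point rounding
```

Here almost all of the variability sits between the groups, which is the situation in which the F-ratio becomes large.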

If no real difference exists between the tested groups (the null hypothesis), the F-ratio will tend to be close to one. The distribution of all possible values of the F statistic is the F-distribution. This is a family of distributions characterized by two parameters, the numerator degrees of freedom and the denominator degrees of freedom; for a one-way ANOVA with k groups and N total observations, these are k - 1 and N - k.
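
A quick simulation illustrates why the F-ratio hovers around one under the null hypothesis. This sketch draws every group from the same normal distribution, so there is no real difference between groups, computes F many times, and averages the results. For an F-distribution with d2 denominator degrees of freedom, the theoretical mean is d2 / (d2 - 2) when d2 > 2, slightly above one. The group count, group size, number of runs, and seed are arbitrary choices for illustration.

```python
import random
from statistics import fmean


def f_ratio(groups):
    """One-way ANOVA F statistic: mean square between / mean square within."""
    k = len(groups)
    n_total = sum(len(g) for g in groups)
    grand_mean = fmean(x for g in groups for x in g)
    ss_between = sum(len(g) * (fmean(g) - grand_mean) ** 2 for g in groups)
    ss_within = sum((x - fmean(g)) ** 2 for g in groups for x in g)
    return (ss_between / (k - 1)) / (ss_within / (n_total - k))


rng = random.Random(42)  # fixed seed so the run is reproducible
k, n = 3, 10  # 3 groups of 10 observations, so df = (2, 27)
simulated = [
    f_ratio([[rng.gauss(0, 1) for _ in range(n)] for _ in range(k)])
    for _ in range(5000)
]
d2 = k * n - k
print(f"average simulated F: {fmean(simulated):.3f}")
print(f"theoretical mean d2/(d2-2): {d2 / (d2 - 2):.3f}")
```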

One-Way vs. Two-Way ANOVA

| One-Way ANOVA | Two-Way ANOVA |
|---|---|
| Uses one independent variable or factor | Uses two independent variables or factors |
| Assesses the impact of a single categorical variable on a continuous dependent variable, identifying significant differences among group means | Used to not only understand the individual effects of two different factors but also how the combination of these two factors influences the outcome |
| Does not account for interactions | Can test for interactions between factors |

A one-way ANOVA evaluates the impact of a single factor on a single response variable. It tests whether there are any statistically significant differences between the means of three or more independent groups.

A two-way ANOVA is an extension of the one-way ANOVA. With a one-way design, one independent variable affects a dependent variable.

With a two-way ANOVA, there are two independent variables. For example, a two-way ANOVA allows a company to compare worker productivity based on two independent variables, such as salary and skill set. It's utilized to see the interaction between the two factors and test the effect of the two factors simultaneously.

Example of ANOVA

Suppose you want to assess the performance of different investment portfolios across various market conditions. The goal is to determine which portfolio strategy performs best under what conditions.

You have three portfolio strategies:

- Technology portfolio (tech stocks): High-risk, high-return

- Balanced portfolio (stocks and bonds): Moderate-risk, moderate return

- Fixed-income portfolio (bonds and money market instruments): Low-risk, low return

You also want to check against two market conditions:

- A bull market

- A bear market

A one-way ANOVA could give a broad overview of portfolio strategy performance, while a two-way ANOVA adds a deeper understanding by including the varying market conditions.

One-Way ANOVA

A one-way ANOVA could be used to initially analyze the performance differences among the three different portfolios without considering the impact of market conditions. The independent variable would be the type of investment portfolio, and the dependent variable would be the returns generated.

You would group the returns of the technology, balanced, and fixed-income portfolios for a preset period and compare the mean returns of the three portfolios to determine if there are statistically significant differences. This would help determine whether different investment strategies result in different returns, but it would not account for how different market conditions might influence these returns.
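
Here is a minimal sketch of this one-way analysis using made-up quarterly returns (in percent) for the three portfolios. The numbers are hypothetical and chosen only to make the arithmetic visible. The code computes the F statistic and its degrees of freedom with the standard library and compares F with a critical value taken from standard F tables. To get an exact p-value you would need an F-distribution function, such as `scipy.stats.f.sf` if SciPy is installed; it is left out so the snippet has no dependencies.

```python
from statistics import fmean

# Hypothetical quarterly returns (%) for each portfolio; illustrative only.
returns = {
    "technology": [8.0, 12.0, -3.0, 15.0],
    "balanced": [5.0, 6.0, 3.0, 7.0],
    "fixed_income": [2.0, 2.5, 3.0, 2.5],
}


def one_way_anova(groups):
    """Return (F statistic, numerator df, denominator df) for a one-way ANOVA."""
    k = len(groups)
    n_total = sum(len(g) for g in groups)
    grand_mean = fmean(x for g in groups for x in g)
    ss_treatment = sum(len(g) * (fmean(g) - grand_mean) ** 2 for g in groups)
    ss_error = sum((x - fmean(g)) ** 2 for g in groups for x in g)
    mst = ss_treatment / (k - 1)  # mean square due to treatment (portfolio type)
    mse = ss_error / (n_total - k)  # mean square due to error (within portfolios)
    return mst / mse, k - 1, n_total - k


f_stat, df1, df2 = one_way_anova(list(returns.values()))
F_CRITICAL = 4.26  # F table value for alpha = 0.05 with df = (2, 9); valid only for this layout
print(f"F({df1}, {df2}) = {f_stat:.2f}")
print("significant at 5%" if f_stat > F_CRITICAL else "not significant at 5%")
```

With these made-up numbers, F is about 1.39, well below the critical value. The technology portfolio has the highest average return, but its returns vary so much from quarter to quarter that the difference could plausibly be due to chance.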

Two-Way ANOVA

Meanwhile, a two-way ANOVA would be more appropriate for analyzing both the effects of the investment portfolio and the market conditions, as well as any interaction between these two factors on the returns.

IMPORTANT

MANOVA (multivariate ANOVA) differs from ANOVA as it tests for several dependent variables simultaneously, while ANOVA assesses only one dependent variable at a time.

You would first need to group each portfolio's returns under both bull and bear market conditions. Next, you would compare the mean returns across both factors to determine the effect of the investment strategy on returns, the effect of market conditions on returns, and whether the effectiveness of a particular investment strategy depends on the market conditions.

Suppose the technology portfolio performs significantly better in bull markets but underperforms in bear markets, while the fixed-income portfolio provides stable returns regardless of the market. Looking at these interactions could help you see when it's best to advise using a technology portfolio and when a bear market means it's soundest to turn to a fixed-income portfolio.
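
The scenario just described can be sketched as a balanced two-way ANOVA, where every portfolio-by-market cell has the same number of observations. The returns below are hypothetical, with two observations per cell, and are built so that the technology portfolio does well in bull markets and poorly in bear markets while the fixed-income portfolio stays flat. The sketch computes sums of squares for each factor, for their interaction, and for error, then forms an F-ratio for each effect. It assumes a balanced, fully crossed design. Unbalanced data call for a more general approach, such as fitting a linear model.

```python
from statistics import fmean

# Hypothetical returns (%) with two observations per (portfolio, market) cell.
# Factor A is the portfolio strategy; factor B is the market condition.
data = {
    ("technology", "bull"): [20.0, 24.0],
    ("technology", "bear"): [-12.0, -8.0],
    ("balanced", "bull"): [10.0, 12.0],
    ("balanced", "bear"): [-2.0, 0.0],
    ("fixed_income", "bull"): [3.0, 4.0],
    ("fixed_income", "bear"): [3.0, 4.0],
}


def two_way_anova(cells):
    """F-ratios for a balanced two-way ANOVA with replication.

    `cells` maps (level of factor A, level of factor B) to a list of observations.
    Every combination of levels must be present, each with the same number (>= 2) of observations.
    """
    a_levels = sorted({i for i, _ in cells})
    b_levels = sorted({j for _, j in cells})
    n = len(next(iter(cells.values())))  # replicates per cell
    if n < 2 or any(len(v) != n for v in cells.values()):
        raise ValueError("need a balanced design with at least two observations per cell")
    a, b = len(a_levels), len(b_levels)

    cell_mean = {key: fmean(vals) for key, vals in cells.items()}
    grand = fmean(cell_mean.values())  # equals the overall mean in a balanced design
    a_mean = {i: fmean(cell_mean[(i, j)] for j in b_levels) for i in a_levels}
    b_mean = {j: fmean(cell_mean[(i, j)] for i in a_levels) for j in b_levels}

    # Sums of squares for each main effect, the interaction, and the residual error.
    ss_a = b * n * sum((a_mean[i] - grand) ** 2 for i in a_levels)
    ss_b = a * n * sum((b_mean[j] - grand) ** 2 for j in b_levels)
    ss_ab = n * sum(
        (cell_mean[(i, j)] - a_mean[i] - b_mean[j] + grand) ** 2
        for i in a_levels
        for j in b_levels
    )
    ss_error = sum((x - cell_mean[key]) ** 2 for key, vals in cells.items() for x in vals)

    ms_error = ss_error / (a * b * (n - 1))
    return {
        "factor A": (ss_a / (a - 1)) / ms_error,
        "factor B": (ss_b / (b - 1)) / ms_error,
        "A x B interaction": (ss_ab / ((a - 1) * (b - 1))) / ms_error,
    }


for effect, f_value in two_way_anova(data).items():
    print(f"{effect}: F = {f_value:.2f}")
```

With these made-up numbers, the interaction F-ratio is far larger than the portfolio main effect. That is the pattern described above: averaged over both markets the portfolios look similar, but how well each one performs depends on the market condition.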

How Does ANOVA Differ From a T-Test?

ANOVA differs from t-tests in that ANOVA can compare three or more groups, while t-tests are only useful for comparing two groups at a time.
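
When only two groups are compared, the two approaches agree. A one-way ANOVA's F statistic equals the square of the pooled-variance (equal-variance) two-sample t statistic, so both tests give the same p-value. This sketch checks the identity on two small made-up samples. The data and function names are hypothetical.

```python
from math import sqrt
from statistics import fmean, variance


def pooled_t(x, y):
    """Two-sample t statistic assuming equal variances (pooled standard deviation)."""
    nx, ny = len(x), len(y)
    pooled_var = ((nx - 1) * variance(x) + (ny - 1) * variance(y)) / (nx + ny - 2)
    return (fmean(x) - fmean(y)) / sqrt(pooled_var * (1 / nx + 1 / ny))


def anova_f(*groups):
    """One-way ANOVA F statistic (between-group mean square / within-group mean square)."""
    k = len(groups)
    n_total = sum(len(g) for g in groups)
    grand = fmean(v for g in groups for v in g)
    ss_between = sum(len(g) * (fmean(g) - grand) ** 2 for g in groups)
    ss_within = sum((v - fmean(g)) ** 2 for g in groups for v in g)
    return (ss_between / (k - 1)) / (ss_within / (n_total - k))


a = [5.1, 4.8, 6.0, 5.5, 5.2]
b = [6.3, 6.8, 5.9, 7.1, 6.5]
t = pooled_t(a, b)
print(f"t^2 = {t ** 2:.4f}, F = {anova_f(a, b):.4f}")  # equal up to floating-point rounding
```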

What Is Analysis of Covariance (ANCOVA)?

Analysis of covariance combines ANOVA and regression. It can be useful for understanding the within-group variance that ANOVA tests do not explain.

Does ANOVA Rely on Any Assumptions?

Yes. ANOVA tests assume that the data within each group are approximately normally distributed and that the variance in each group is roughly equal. They also assume that all observations are made independently. If these assumptions do not hold, ANOVA may not be reliable for comparing groups.
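
The equal-variance assumption can be checked before running ANOVA. One common check is Levene's test, which runs a one-way ANOVA on each observation's absolute deviation from its group mean. The Brown-Forsythe variant uses the group median instead. This sketch computes Levene's W statistic with the standard library on hypothetical returns. Converting W to a p-value requires an F-distribution with (k - 1, N - k) degrees of freedom. If SciPy is installed, `scipy.stats.levene` handles this; note that its default `center='median'` gives the Brown-Forsythe variant.

```python
from statistics import fmean


def anova_f(groups):
    """One-way ANOVA F statistic for a list of groups."""
    k = len(groups)
    n_total = sum(len(g) for g in groups)
    grand = fmean(x for g in groups for x in g)
    ss_between = sum(len(g) * (fmean(g) - grand) ** 2 for g in groups)
    ss_within = sum((x - fmean(g)) ** 2 for g in groups for x in g)
    return (ss_between / (k - 1)) / (ss_within / (n_total - k))


def levene_w(groups):
    """Levene's W: the ANOVA F statistic computed on |x - group mean| instead of x."""
    abs_deviations = [[abs(x - fmean(g)) for x in g] for g in groups]
    return anova_f(abs_deviations)


# Hypothetical quarterly returns (%) for three portfolios with visibly different spreads.
groups = [
    [2.0, 2.5, 3.0, 2.5],
    [8.0, 12.0, -3.0, 15.0],
    [5.0, 6.0, 3.0, 7.0],
]
w = levene_w(groups)
k, n_total = len(groups), sum(len(g) for g in groups)
print(f"Levene's W = {w:.2f} on ({k - 1}, {n_total - k}) degrees of freedom")
```

Here W is about 4.13, just under the 5% critical value of roughly 4.26 for (2, 9) degrees of freedom. With only four observations per group, even this visibly uneven spread falls short of formal significance, which is a reminder that small samples give such checks little power.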

The Bottom Line

ANOVA is a robust statistical tool that allows researchers and analysts to simultaneously compare arithmetic means across multiple groups.

By dividing variance into different sources, ANOVA helps identify significant differences and uncover meaningful relationships between variables. Its versatility and ability to handle various factors make it an essential tool for many fields that use statistics, including finance and investing.

Understanding ANOVA's principles, forms, and applications is crucial for leveraging this technique effectively. Whether using a one-way or two-way ANOVA, researchers can gain greater clarity about complex systems to make data-driven decisions.

As with any statistical method, it's essential to interpret the results carefully and consider the context and limitations of the analysis.

Authors: Will Kenton, T. C. Okenna

Data Analysis Techniques

Data analytics is the process of analyzing raw data to draw out meaningful insights. These insights are then used to determine the best course of action.

When is the best time to roll out that marketing campaign? Is the current team structure as effective as it could be? Which customer segments are most likely to purchase your new product?

Ultimately, data analytics is a crucial driver of any successful business strategy. But how do data analysts actually turn raw data into something useful? Data analysts use a range of methods and techniques, depending on the type of data in question and the kinds of insights they want to uncover.

1. What is data analysis and why is it important?

Data analysis is, put simply, the process of discovering useful information by evaluating data. This is done through a process of inspecting, cleaning, transforming, and modeling data using analytical and statistical tools, which we will explore in detail further along in this article.

Why is data analysis important? Analyzing data effectively helps organizations make business decisions. Nowadays, data is collected by businesses constantly: through surveys, online tracking, online marketing analytics, collected subscription and registration data (think newsletters), social media monitoring, among other methods.

These data will appear as different structures, including—but not limited to—the following:

Big data

The concept of big data—data that is so large, fast, or complex, that it is difficult or impossible to process using traditional methods—gained momentum in the early 2000s. Then, Doug Laney, an industry analyst, articulated what is now known as the mainstream definition of big data as the three Vs: volume, velocity, and variety.

- Volume: As mentioned earlier, organizations are collecting data constantly. In the not-too-distant past it would have been a real issue to store, but nowadays storage is cheap and takes up little space.

- Velocity: Received data needs to be handled in a timely manner. With the growth of the Internet of Things, this can mean these data are coming in constantly, and at an unprecedented speed.

- Variety: The data being collected and stored by organizations comes in many forms, ranging from structured data—that is, more traditional, numerical data—to unstructured data—think emails, videos, audio, and so on. We’ll cover structured and unstructured data a little further on.

Metadata

This is a form of data that provides information about other data, such as an image. In everyday life you’ll find this by, for example, right-clicking on a file in a folder and selecting “Get Info”, which will show you information such as file size and kind, date of creation, and so on.

Real-time data

This is data that is presented as soon as it is acquired. A good example of this is a stock market ticker, which provides information on the most-active stocks in real time.

Machine data

This is data that is produced wholly by machines, without human instruction. An example of this could be call logs automatically generated by your smartphone.

Quantitative and qualitative data

Quantitative data, otherwise known as structured data, may appear as a “traditional” database, that is, with rows and columns. Qualitative data, otherwise known as unstructured data, are the other types of data that don’t fit into rows and columns, which can include text, images, videos, and more. We’ll discuss this further in the next section.

2. What is the difference between quantitative and qualitative data?

How you analyze your data depends on the type of data you’re dealing with—quantitative or qualitative. So what’s the difference?

Quantitative data is anything measurable, comprising specific quantities and numbers. Some examples of quantitative data include sales figures, email click-through rates, number of website visitors, and percentage revenue increase. Quantitative data analysis techniques focus on the statistical, mathematical, or numerical analysis of (usually large) datasets. This includes the manipulation of statistical data using computational techniques and algorithms. Quantitative analysis techniques are often used to explain certain phenomena or to make predictions.

Qualitative data cannot be measured objectively, and is therefore open to more subjective interpretation. Some examples of qualitative data include comments left in response to a survey question, things people have said during interviews, tweets and other social media posts, and the text included in product reviews. With qualitative data analysis, the focus is on making sense of unstructured data (such as written text, or transcripts of spoken conversations). Often, qualitative analysis will organize the data into themes—a process which, fortunately, can be automated.

Data analysts work with both quantitative and qualitative data, so it’s important to be familiar with a variety of analysis methods. Let’s take a look at some of the most useful techniques now.

3. Data analysis techniques

Now that we’re familiar with some of the different types of data, let’s focus on the topic at hand: different methods for analyzing data.

a. Regression analysis

Regression analysis is used to estimate the relationship between a set of variables. When conducting any type of regression analysis, you’re looking to see if there’s a correlation between a dependent variable (that’s the variable or outcome you want to measure or predict) and any number of independent variables (factors which may have an impact on the dependent variable). The aim of regression analysis is to estimate how one or more variables might impact the dependent variable, in order to identify trends and patterns. This is especially useful for making predictions and forecasting future trends.

Let’s imagine you work for an ecommerce company and you want to examine the relationship between (a) how much money is spent on social media marketing and (b) sales revenue. In this case, sales revenue is your dependent variable: it’s the factor you’re most interested in predicting and boosting. Social media spend is your independent variable; you want to determine whether or not it has an impact on sales and, ultimately, whether it’s worth increasing, decreasing, or keeping the same.

Using regression analysis, you’d be able to see if there’s a relationship between the two variables. A positive correlation would imply that the more you spend on social media marketing, the more sales revenue you make. No correlation at all might suggest that social media marketing has no bearing on your sales. Understanding the relationship between these two variables would help you make informed decisions about the social media budget going forward.

However, it’s important to note that, on their own, regressions can only be used to determine whether or not there is a relationship between a set of variables; they don’t tell you anything about cause and effect. So, while a positive correlation between social media spend and sales revenue may suggest that one impacts the other, it’s impossible to draw definitive conclusions based on this analysis alone.

There are many different types of regression analysis, and the model you use depends on the type of data you have for the dependent variable. For example, your dependent variable might be continuous (i.e. something that can be measured on a continuous scale, such as sales revenue in USD), in which case you’d use a different type of regression analysis than if your dependent variable was categorical in nature (i.e. comprising values that can be categorized into a number of distinct groups based on a certain characteristic, such as customer location by continent). For instance, linear regression is a common choice for a continuous outcome, while logistic regression is a common choice for a categorical one.
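
Here is a minimal sketch of the social media example as a simple linear regression fitted by ordinary least squares. The monthly spend and revenue figures are made up for illustration. The slope estimates how much revenue changes, on average, for each extra dollar of spend. As noted above, the fit says nothing about causation on its own. The function name `least_squares` is hypothetical.

```python
from statistics import fmean

# Hypothetical monthly figures (USD): social media spend and sales revenue.
spend = [1000, 1500, 2000, 2500, 3000, 3500]
revenue = [21000, 24500, 27000, 31500, 33000, 37000]


def least_squares(x, y):
    """Fit y = intercept + slope * x by ordinary least squares; also return Pearson's r."""
    mx, my = fmean(x), fmean(y)
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    sxx = sum((xi - mx) ** 2 for xi in x)
    syy = sum((yi - my) ** 2 for yi in y)
    slope = sxy / sxx
    intercept = my - slope * mx
    r = sxy / (sxx * syy) ** 0.5  # correlation: direction and strength of the linear link
    return slope, intercept, r


slope, intercept, r = least_squares(spend, revenue)
print(f"revenue ~ {intercept:.0f} + {slope:.2f} * spend (r = {r:.3f})")
print(f"predicted revenue at $4,000 spend: {intercept + slope * 4000:.0f}")
```

On Python 3.10 or later, `statistics.linear_regression(x, y)` returns the same slope and intercept, and `statistics.correlation(x, y)` returns Pearson's r.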

Regression analysis in action: Investigating the relationship between clothing brand Benetton’s advertising expenditure and sales

b. Monte Carlo simulation

When making decisions or taking certain actions, there are a range of different possible outcomes. If you take the bus, you might get stuck in traffic. If you walk, you might get caught in the rain or bump into your chatty neighbor, potentially delaying your journey. In everyday life, we tend to briefly weigh up the pros and cons before deciding which action to take; however, when the stakes are high, it’s essential to calculate, as thoroughly and accurately as possible, all the potential risks and rewards.

Monte Carlo simulation, otherwise known as the Monte Carlo method, is a computerized technique used to generate models of possible outcomes and their probability distributions. It essentially considers a range of possible outcomes and then calculates how likely it is that each particular outcome will be realized. The Monte Carlo method is used by data analysts to conduct advanced risk analysis, allowing them to better forecast what might happen in the future and make decisions accordingly.

So how does Monte Carlo simulation work, and what can it tell us? To run a Monte Carlo simulation, you’ll start with a mathematical model of your data, such as a spreadsheet. Within your spreadsheet, you’ll have one or several outputs that you’re interested in: profit, for example, or number of sales. You’ll also have a number of inputs; these are variables that may impact your output variable. If you’re looking at profit, relevant inputs might include the number of sales, total marketing spend, and employee salaries.

If you knew the exact, definitive values of all your input variables, you’d quite easily be able to calculate what profit you’d be left with at the end. However, when these values are uncertain, a Monte Carlo simulation enables you to estimate the range of possible outcomes and their probabilities. What will your profit be if you make 100,000 sales and hire five new employees on a salary of $50,000 each? What is the likelihood of this outcome? What will your profit be if you only make 12,000 sales and hire five new employees? And so on.

It does this by replacing all uncertain values with functions that generate random samples from distributions determined by you, and then running the calculation many times to build up an approximation of the possible outcomes and their probability distributions. The Monte Carlo method is one of the most popular techniques for calculating the effect of unpredictable variables on a specific output variable, making it ideal for risk analysis.
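
The profit example can be sketched in a few lines. Every input below is a made-up assumption for illustration: normally distributed sales, a uniform range for marketing spend, and a fixed price, unit cost, and salary bill. In practice you would choose distributions that reflect your own data. Each trial draws one value per uncertain input and computes profit, and together the trials approximate the distribution of possible outcomes.

```python
import random
from statistics import fmean, quantiles

rng = random.Random(7)  # fixed seed for a reproducible run

# Hypothetical assumptions, for illustration only.
PRICE = 25.0  # revenue per sale (USD)
UNIT_COST = 10.0  # cost per sale (USD)
SALARIES = 5 * 50_000  # five new employees at $50,000 each


def simulate_profit() -> float:
    """One trial: draw the uncertain inputs, then compute profit."""
    sales = max(0.0, rng.gauss(40_000, 15_000))  # uncertain sales volume, floored at zero
    marketing = rng.uniform(100_000, 200_000)  # uncertain marketing spend
    return sales * (PRICE - UNIT_COST) - marketing - SALARIES


trials = [simulate_profit() for _ in range(20_000)]
loss_probability = sum(p < 0 for p in trials) / len(trials)
cuts = quantiles(trials, n=20)  # 19 cut points at 5%, 10%, ..., 95%
p5, median, p95 = cuts[0], cuts[9], cuts[18]

print(f"mean profit: {fmean(trials):,.0f} USD")
print(f"probability of a loss: {loss_probability:.1%}")
print(f"90% of simulated profits fall between {p5:,.0f} and {p95:,.0f} USD")
```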

Monte Carlo simulation in action: A case study using Monte Carlo simulation for risk analysis

c. Factor analysis

Factor analysis is a technique used to reduce a large number of variables to a smaller number of factors. It works on the basis that multiple separate, observable variables correlate with each other because they are all associated with an underlying construct. This is useful not only because it condenses large datasets into a smaller, more manageable number of variables, but also because it helps to uncover hidden patterns. This allows you to explore concepts that cannot be easily measured or observed, such as wealth, happiness, fitness, or, for a more business-relevant example, customer loyalty and satisfaction.

Let’s imagine you want to get to know your customers better, so you send out a rather long survey comprising one hundred questions. Some of the questions relate to how they feel about your company and product; for example, “Would you recommend us to a friend?” and “How would you rate the overall customer experience?” Other questions ask things like “What is your yearly household income?” and “How much are you willing to spend on skincare each month?”

Once your survey has been sent out and completed by lots of customers, you end up with a large dataset that essentially tells you one hundred different things about each customer (assuming each customer gives one hundred responses). Instead of looking at each of these responses (or variables) individually, you can use factor analysis to group them into factors that belong together; in other words, to relate them to a single underlying construct. In this example, factor analysis works by finding survey items that are strongly correlated, meaning that they tend to vary together (they covary). So, if there’s a strong positive correlation between household income and how much customers are willing to spend on skincare each month (i.e. as one increases, so does the other), these items may be grouped together. Together with other variables (survey responses), you may find that they can be reduced to a single factor such as “consumer purchasing power”. Likewise, if a customer experience rating of 10/10 correlates strongly with “yes” responses regarding how likely they are to recommend your product to a friend, these items may be reduced to a single factor such as “customer satisfaction”.

In the end, you have a smaller number of factors rather than hundreds of individual variables. These factors are then taken forward for further analysis, allowing you to learn more about your customers (or any other area you’re interested in exploring).
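
This sketch shows only the first step that factor analysis builds on: computing the correlations between survey items and flagging the pairs that move together strongly. It is a simple correlation screen, not factor analysis itself, which goes on to estimate factor loadings (for example with scikit-learn's `FactorAnalysis`). The responses, item names, and the 0.8 threshold are hypothetical.

```python
from itertools import combinations
from statistics import fmean


def pearson(x, y):
    """Pearson correlation coefficient between two equal-length lists."""
    mx, my = fmean(x), fmean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / (sxx * syy) ** 0.5


# Hypothetical answers from six customers to four survey items (1 = "yes", 0 = "no").
responses = {
    "household_income_k": [30, 45, 60, 80, 100, 120],
    "monthly_skincare_spend": [10, 15, 22, 30, 38, 45],
    "experience_rating": [9, 4, 8, 3, 10, 5],
    "would_recommend": [1, 0, 1, 0, 1, 0],
}

THRESHOLD = 0.8  # arbitrary cut-off for "strongly correlated"
strong_pairs = [
    (a, b, round(pearson(responses[a], responses[b]), 2))
    for a, b in combinations(responses, 2)
    if abs(pearson(responses[a], responses[b])) >= THRESHOLD
]
for a, b, r in strong_pairs:
    print(f"{a} <-> {b}: r = {r}")
```

The two strongly correlated pairs line up with the candidate factors described above: income and skincare spend (“consumer purchasing power”), and experience rating and willingness to recommend (“customer satisfaction”).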

Factor analysis in action: Using factor analysis to explore customer behavior patterns in Tehran

d. Cohort analysis

Cohort analysis is a data analytics technique that groups users based on a shared characteristic, such as the date they signed up for a service or the product they purchased. Once users are grouped into cohorts, analysts can track their behavior over time to identify trends and patterns.

So what does this mean and why is it useful? Let’s break down the above definition further. A cohort is a group of people who share a common characteristic (or action) during a given time period. Students who enrolled at university in 2020 may be referred to as the 2020 cohort. Customers who purchased something from your online store via the app in the month of December may also be considered a cohort.

With cohort analysis, you’re dividing your customers or users into groups and looking at how these groups behave over time. So, rather than looking at a single, isolated snapshot of all your customers at a given moment in time (with each customer at a different point in their journey), you’re examining your customers’ behavior in the context of the customer lifecycle. As a result, you can start to identify patterns of behavior at various points in the customer journey—say, from their first ever visit to your website, through to email newsletter sign-up, to their first purchase, and so on. As such, cohort analysis is dynamic, allowing you to uncover valuable insights about the customer lifecycle.

This is useful because it allows companies to tailor their service to specific customer segments (or cohorts). Let’s imagine you run a 50% discount campaign in order to attract potential new customers to your website. Once you’ve attracted a group of new customers (a cohort), you’ll want to track whether they actually buy anything and, if they do, whether or not (and how frequently) they make a repeat purchase. With these insights, you’ll start to gain a much better understanding of when this particular cohort might benefit from another discount offer or retargeting ads on social media, for example. Ultimately, cohort analysis allows companies to optimize their service offerings (and marketing) to provide a more targeted, personalized experience. You can learn more about how to run cohort analysis using Google Analytics.
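
Here is a minimal cohort retention table. Customers are grouped by the month of their first purchase, and for each cohort the code computes the share of customers who made a purchase 0, 1, 2, ... months after joining. The purchase log and the month-index representation are made up for illustration. Real data would come from your order history.

```python
from collections import defaultdict

# Hypothetical purchase log: (customer_id, month_index), where 0 = January, 1 = February, etc.
purchases = [
    ("c1", 0), ("c1", 1), ("c1", 3),
    ("c2", 0), ("c2", 2),
    ("c3", 0),
    ("c4", 1), ("c4", 2),
    ("c5", 1),
    ("c6", 1), ("c6", 2), ("c6", 3),
]

# Each customer's cohort is the month of their first purchase.
first_month = {}
for customer, month in purchases:
    first_month[customer] = min(month, first_month.get(customer, month))

cohort_size = defaultdict(int)
for month in first_month.values():
    cohort_size[month] += 1

# active[cohort][offset] = customers in that cohort who bought `offset` months after joining.
active = defaultdict(lambda: defaultdict(set))
for customer, month in purchases:
    cohort = first_month[customer]
    active[cohort][month - cohort].add(customer)

retention = {
    cohort: {offset: len(custs) / cohort_size[cohort] for offset, custs in sorted(offsets.items())}
    for cohort, offsets in sorted(active.items())
}
for cohort, row in retention.items():
    shares = {offset: round(share, 2) for offset, share in row.items()}
    print(f"cohort month {cohort} ({cohort_size[cohort]} customers): {shares}")
```

Reading across a row shows how one cohort's activity decays over time. Reading down a column compares cohorts at the same stage of their lifecycle, which is where the effect of something like a discount campaign would show up.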

Cohort analysis in action: How Ticketmaster used cohort analysis to boost revenue

e. Cluster analysis

Cluster analysis is an exploratory technique that seeks to identify structures within a dataset. The goal of cluster analysis is to sort different data points into groups (or clusters) that are internally homogeneous and externally heterogeneous. This means that data points within a cluster are similar to each other, and dissimilar to data points in another cluster. Clustering is used to gain insight into how data is distributed in a given dataset, or as a preprocessing step for other algorithms.

There are many real-world applications of cluster analysis. In marketing, cluster analysis is commonly used to group a large customer base into distinct segments, allowing for a more targeted approach to advertising and communication. Insurance firms might use cluster analysis to investigate why certain locations are associated with a high number of insurance claims. Another common application is in geology, where experts will use cluster analysis to evaluate which cities are at greatest risk of earthquakes (and thus try to mitigate the risk with protective measures).

It’s important to note that, while cluster analysis may reveal structures within your data, it won’t explain why those structures exist. With that in mind, cluster analysis is a useful starting point for understanding your data and informing further analysis. Clustering algorithms are also used in machine learning.
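
Cluster analysis covers many algorithms. K-means is one of the most common, and this sketch implements it from scratch (Lloyd's algorithm) on made-up two-dimensional customer data: annual spend in thousands of dollars and purchases per year. The choice of k-means, k = 2, the starting centers, and the data are all illustrative assumptions; the article does not prescribe a specific algorithm.

```python
from math import dist
from statistics import fmean


def k_means(points, centers, iterations=100):
    """Lloyd's algorithm: assign each point to its nearest center, then move centers to cluster means."""
    centers = list(centers)
    for _ in range(iterations):
        clusters = [[] for _ in centers]
        for p in points:
            nearest = min(range(len(centers)), key=lambda i: dist(p, centers[i]))
            clusters[nearest].append(p)
        new_centers = [
            tuple(fmean(coord) for coord in zip(*cluster)) if cluster else centers[i]
            for i, cluster in enumerate(clusters)
        ]
        if new_centers == centers:  # assignments have stabilized
            break
        centers = new_centers
    return centers, clusters


# Hypothetical customers: (annual spend in $1,000s, purchases per year).
customers = [(1.0, 2), (1.5, 3), (2.0, 2), (8.0, 20), (9.0, 22), (8.5, 18)]
centers, clusters = k_means(customers, centers=[customers[0], customers[3]])
for center, members in zip(centers, clusters):
    print(f"center {center}: {members}")
```

K-means requires you to choose the number of clusters up front and is sensitive to the starting centers and to the scale of each variable. In practice, variables are often standardized first, and several starting points are tried.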

Cluster analysis in action: Using cluster analysis for customer segmentation—a telecoms case study example

f. Time series analysis

Time series analysis is a statistical technique used to identify trends and cycles over time. Time series data is a sequence of data points which measure the same variable at different points in time (for example, weekly sales figures or monthly email sign-ups). By looking at time-related trends, analysts are able to forecast how the variable of interest may fluctuate in the future.

When conducting time series analysis, the main patterns you’ll be looking out for in your data are:

- Trends: Sustained (not necessarily linear) increases or decreases over an extended time period.

- Seasonality: Predictable fluctuations in the data due to seasonal factors over a short period of time. For example, you might see a peak in swimwear sales in summer around the same time every year.

- Cyclic patterns: Unpredictable cycles where the data fluctuates. Cyclical trends are not due to seasonality, but rather, may occur as a result of economic or industry-related conditions.
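One classical way to separate a trend from seasonality is sketched below. The sketch is a minimal NumPy example that assumes noise-free, hypothetical monthly sales made of a linear trend plus a yearly cycle. A centred 2x12 moving average estimates the trend. Averaging the detrended values for each calendar month then estimates the seasonal pattern.

```python
import numpy as np

# Hypothetical monthly sales for 4 years: linear trend + yearly seasonal cycle (no noise)
months = np.arange(48)
trend = 100 + 2.0 * months
seasonal = 10 * np.sin(2 * np.pi * months / 12)
sales = trend + seasonal

# Trend estimate: centred 2x12 moving average (13 weights, half weight at both ends)
weights = np.r_[0.5, np.ones(11), 0.5] / 12
trend_est = np.convolve(sales, weights, mode="valid")  # 36 values, aligned with months 6..41

# Remove the trend where it could be estimated
detrended = sales[6:42] - trend_est

# Seasonal index: average detrended value for each calendar month (0 = first month)
month_of_year = months[6:42] % 12
seasonal_index = np.array([detrended[month_of_year == m].mean() for m in range(12)])
print(seasonal_index.round(2))
```

Real data also contains noise and possibly cycles, so the estimates would only be approximate.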

As you can imagine, the ability to make informed predictions about the future has immense value for business. Time series analysis and forecasting are used across a variety of industries, most commonly for stock market analysis, economic forecasting, and sales forecasting. There are different types of time series models depending on the data you’re using and the outcomes you want to predict. Many common models are built from three components:

- Autoregressive (AR) terms, which use past values of the series.
- Integration (I), which means differencing the series to remove trends.
- Moving average (MA) terms, which use past forecast errors.

Combined, these components form the widely used ARIMA family of models.
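The autoregressive idea can be sketched in a few lines. This is a minimal example, assuming a simulated, hypothetical AR(1) series in which each value depends on the previous one. It estimates the coefficient by ordinary least squares with NumPy and makes a one-step forecast. Libraries such as statsmodels provide full ARIMA implementations; this sketch only covers the AR part.

```python
import numpy as np

# Simulate a hypothetical AR(1) series: y_t = 5 + 0.7 * y_{t-1} + noise
rng = np.random.default_rng(0)
n, c_true, phi_true = 2000, 5.0, 0.7
y = np.empty(n)
y[0] = c_true / (1 - phi_true)  # start at the long-run mean
for t in range(1, n):
    y[t] = c_true + phi_true * y[t - 1] + rng.normal(scale=1.0)

# Fit AR(1) by ordinary least squares: regress y_t on [1, y_{t-1}]
X = np.column_stack([np.ones(n - 1), y[:-1]])
(c_hat, phi_hat), *_ = np.linalg.lstsq(X, y[1:], rcond=None)

# One-step-ahead forecast from the last observed value
forecast = c_hat + phi_hat * y[-1]
print(f"c = {c_hat:.2f}, phi = {phi_hat:.2f}, next value = {forecast:.2f}")
```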

Time series analysis in action: Developing a time series model to predict jute yarn demand in Bangladesh

g. Sentiment analysis

When you think of data, your mind probably automatically goes to numbers and spreadsheets.

Many companies overlook the value of qualitative data, but in reality, there are untold insights to be gained from what people (especially customers) write and say about you. So how do you go about analyzing textual data?

One highly useful qualitative technique is sentiment analysis, a technique which belongs to the broader category of text analysis—the (usually automated) process of sorting and understanding textual data.

With sentiment analysis, the goal is to interpret and classify the emotions conveyed within textual data. From a business perspective, this allows you to ascertain how your customers feel about various aspects of your brand, product, or service.

There are several different types of sentiment analysis models, each with a slightly different focus. The three main types include:

Fine-grained sentiment analysis

If you want to focus on opinion polarity (i.e. positive, neutral, or negative) in depth, fine-grained sentiment analysis will allow you to do so.

For example, if you wanted to interpret star ratings given by customers, you might use fine-grained sentiment analysis to categorize the various ratings along a scale ranging from very positive to very negative.

Emotion detection

This model often uses complex machine learning algorithms to pick out various emotions from your textual data.

You might use an emotion detection model to identify words associated with happiness, anger, frustration, and excitement, giving you insight into how your customers feel when writing about you or your product on, say, a product review site.

Aspect-based sentiment analysis

This type of analysis allows you to identify what specific aspects the emotions or opinions relate to, such as a certain product feature or a new ad campaign.

If a customer writes that they “find the new Instagram advert so annoying”, your model should detect not only a negative sentiment, but also the object towards which it’s directed.
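A toy version of aspect-based analysis is sketched below. It pairs each aspect term with the opinion words found in the same clause. The aspect list, the opinion lexicon and the clause-splitting rule are hypothetical simplifications for illustration. Production systems typically rely on trained NLP models instead.

```python
import re

# Hypothetical aspect terms and opinion lexicon, for illustration only
ASPECTS = {"advert", "price", "battery", "screen"}
OPINIONS = {"annoying": "negative", "great": "positive",
            "expensive": "negative", "bright": "positive"}

def aspect_sentiments(text):
    """Pair each aspect term with the polarity of opinion words in the same clause."""
    results = []
    # Treat punctuation and the word 'but' as clause boundaries
    for clause in re.split(r"[,.;!?]|\bbut\b", text.lower()):
        words = re.findall(r"[a-z]+", clause)
        aspects = [w for w in words if w in ASPECTS]
        opinions = [OPINIONS[w] for w in words if w in OPINIONS]
        for aspect in aspects:
            for polarity in opinions:
                results.append((aspect, polarity))
    return results

print(aspect_sentiments("I find the new Instagram advert so annoying"))
# [('advert', 'negative')]
print(aspect_sentiments("The screen is bright, but the battery is annoying"))
```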

In a nutshell, sentiment analysis uses various Natural Language Processing (NLP) algorithms and systems which are trained to associate certain inputs (for example, certain words) with certain outputs.

For example, the input “annoying” would be recognized and tagged as “negative”. Sentiment analysis is crucial to understanding how your customers feel about you and your products, for identifying areas for improvement, and even for averting PR disasters in real time!
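The input-to-output mapping described above can be illustrated with a simple lexicon-based classifier. This is a rule-based approach, simpler than the trained NLP models the text refers to. The word scores are a hypothetical lexicon chosen for the example.

```python
import re

# Hypothetical polarity lexicon: word -> score (real systems learn or curate these)
LEXICON = {"love": 2, "great": 2, "good": 1,
           "annoying": -2, "bad": -1, "terrible": -2}

def classify_sentiment(text):
    """Tag text as positive, neutral or negative by summing word polarity scores."""
    words = re.findall(r"[a-z']+", text.lower())
    score = sum(LEXICON.get(w, 0) for w in words)
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"

print(classify_sentiment("I find the new Instagram advert so annoying"))  # negative
print(classify_sentiment("Great product, I love it"))  # positive
```

A plain word list misses negation ("not bad") and sarcasm. This is one reason practical systems use trained models rather than fixed rules.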

Sentiment analysis in action: 5 Real-world sentiment analysis case studies

4. The data analysis process

The data analysis process generally consists of the following phases:

Defining the question

The first step for any data analyst will be to define the objective of the analysis, sometimes called a ‘problem statement’. Essentially, you’re asking a question with regard to a business problem you’re trying to solve. Once you’ve defined this, you’ll then need to determine which data sources will help you answer this question.

Collecting the data

Now that you’ve defined your objective, the next step will be to set up a strategy for collecting and aggregating the appropriate data. Will you be using quantitative (numeric) or qualitative (descriptive) data? Will the data be first-party, second-party, or third-party?

Cleaning the data

Unfortunately, your collected data isn’t automatically ready for analysis, so you’ll have to clean it first. As a data analyst, you’ll likely find that this phase of the process takes up the most time. During the data cleaning process, you will likely be:

- Removing major errors, duplicates, and outliers

- Removing unwanted data points

- Structuring the data—that is, fixing typos, layout issues, etc.

- Filling in major gaps in data
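The cleaning steps above can be sketched with pandas. This is a minimal example, assuming the data is already loaded into a DataFrame. The columns and values are hypothetical. Filling gaps with the median is only one of several reasonable strategies.

```python
import pandas as pd

# Hypothetical raw data with typical problems: a duplicate row,
# inconsistent spacing/casing, and missing values
raw = pd.DataFrame({
    "customer": ["Ann", "Ann", "bob ", "Cara", "Dev"],
    "city": ["London", "London", "london", "Paris", None],
    "spend": [120.0, 120.0, 80.0, None, 95.0],
})

clean = (
    raw.drop_duplicates()  # remove exact duplicate rows
    .assign(
        customer=lambda d: d["customer"].str.strip().str.title(),  # fix stray spaces and casing
        city=lambda d: d["city"].str.title(),  # harmonise inconsistent spellings
    )
)

# Fill a numeric gap with the column median
clean["spend"] = clean["spend"].fillna(clean["spend"].median())

# Drop rows that are still missing a key field
clean = clean.dropna(subset=["city"])
print(clean)
```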

Analyzing the data

Now that we’ve finished cleaning the data, it’s time to analyze it! Many analysis methods have already been described in this article, and it’s up to you to decide which one will best suit the assigned objective. It may fall under one of the following categories:

- Descriptive analysis, which identifies what has already happened

- Diagnostic analysis, which focuses on understanding why something has happened

- Predictive analysis, which identifies future trends based on historical data

- Prescriptive analysis, which allows you to make recommendations for the future

Visualizing and sharing your findings

We’re almost at the end of the road! Analyses have been made, and insights have been gleaned. All that remains to be done is to share this information with others. This is usually done with a data visualization tool, such as Google Charts or Tableau.

Authors: T. C. Okenna

What is correlation analysis?

Correlation analysis is a statistical method used to evaluate the relationship between two variables, such as the association between body height and shoe size.

The strength of this relationship is measured by the correlation coefficient, which ranges from -1 to +1. A coefficient close to +1 indicates a strong positive correlation, while a value near -1 signifies a strong negative correlation. Values around zero suggest little to no relationship. Correlation analyses can thus be used to make a statement about the strength and direction of the correlation.
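The most common coefficient, Pearson's r, divides the covariance of the two variables by the product of their standard deviations. The sketch below computes r from that definition and checks the result against NumPy's built-in `np.corrcoef`. The height and shoe-size values are hypothetical.

```python
import numpy as np

def pearson_r(x, y):
    """Pearson correlation: sum of co-deviations over the root of the summed squared deviations."""
    x, y = np.asarray(x, dtype=float), np.asarray(y, dtype=float)
    dx, dy = x - x.mean(), y - y.mean()
    return (dx * dy).sum() / np.sqrt((dx ** 2).sum() * (dy ** 2).sum())

# Hypothetical data: body height (cm) and shoe size (EU)
height = [160, 165, 170, 175, 180, 185]
shoe = [37, 39, 40, 42, 43, 45]

r = pearson_r(height, shoe)
print(f"r = {r:.3f}")
print(f"NumPy check: {np.corrcoef(height, shoe)[0, 1]:.3f}")
```

A value this close to +1 would be read as a strong positive correlation.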

Example

You want to find out whether there is a connection between the age at which a child speaks its first sentences and its later success at school.

Correlation and causality

If correlation analysis reveals a relationship between two variables, it is possible to further investigate whether one variable can be used to predict the other. For instance, if a correlation is found, one could examine whether the age at which a child first speaks sentences can be used to predict their future academic success through linear regression analysis.
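A simple linear regression of this kind can be fitted with SciPy's `scipy.stats.linregress`. The ages and scores below are invented purely for illustration; they are not study results. As the next paragraph stresses, a fitted line describes an association and does not establish causation.

```python
import numpy as np
from scipy.stats import linregress

# Hypothetical data, for illustration only: age at first sentences (months)
# and a later school performance score (0-100)
age_months = np.array([18, 20, 22, 24, 26, 28])
school_score = np.array([85, 82, 80, 76, 75, 71])

result = linregress(age_months, school_score)
print(f"slope = {result.slope:.2f}, intercept = {result.intercept:.2f}, r = {result.rvalue:.3f}")

# Use the fitted line to predict the score for a child who spoke first sentences at 21 months
predicted = result.intercept + result.slope * 21
print(f"Predicted score at 21 months: {predicted:.1f}")
```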

However, caution is necessary! Correlations do not imply causation. Any identified correlations should be examined in greater detail and not immediately interpreted as causal relationships, even if a connection seems obvious.

Correlation and causality example:

If the correlation between sales figures and price is analysed and a strong correlation is identified, it would be logical to assume that sales figures are influenced by the price (and not vice versa). This assumption can, however, by no means be proven on the basis of a correlation analysis.

However, in some cases, the nature of the variables allows for a causal relationship to be assumed from the outset. For example, if a correlation is found between age and salary, it is evident that age influences salary rather than the reverse—otherwise, it would imply that reducing one's salary could somehow make a person younger, which is clearly nonsensical.

Interpret correlation

Correlation analysis allows two statements to be made about the relationship between two metric or ordinally scaled variables: one about its direction and one about its strength. The direction indicates whether the correlation is positive or negative, while the strength indicates whether the correlation between the variables is strong or weak. For metric variables, the Pearson correlation coefficient measures the linear relationship. For ordinally scaled variables, a rank correlation such as Spearman's is typically used.

Positive correlation

A positive correlation exists if larger values of the variable x are accompanied by larger values of the variable y, and the other way around. Height and shoe size, for example, correlate positively, so the correlation coefficient lies between 0 and 1.

Negative correlation

A negative correlation exists if larger values of the variable x are accompanied by smaller values of the variable y, and the other way around. The product price and the sales quantity usually have a negative correlation; the more expensive a product is, the smaller the sales quantity. In this case, the correlation coefficient is between -1 and 0, so it assumes a negative value.

Strength of correlation

To judge the strength of a correlation, the absolute value of the correlation coefficient r can be interpreted using the following table as a rough guide:

| \|r\| | Strength of correlation |
|---|---|
| 0.0 to < 0.1 | no correlation |
| 0.1 to < 0.3 | little correlation |
| 0.3 to < 0.5 | medium correlation |
| 0.5 to < 0.7 | high correlation |
| 0.7 to 1 | very high correlation |
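The table above can be encoded as a small helper that reports both the direction and the strength of a coefficient. This is a sketch. The cut-offs follow the table, with each lower bound inclusive and each upper bound exclusive. They are a rule of thumb, not a universal standard.

```python
def describe_correlation(r):
    """Return (direction, strength) for a correlation coefficient r in [-1, 1]."""
    if not -1.0 <= r <= 1.0:
        raise ValueError("r must lie between -1 and +1")
    direction = "positive" if r > 0 else "negative" if r < 0 else "none"
    a = abs(r)  # strength depends only on the magnitude of r
    if a < 0.1:
        strength = "no correlation"
    elif a < 0.3:
        strength = "little correlation"
    elif a < 0.5:
        strength = "medium correlation"
    elif a < 0.7:
        strength = "high correlation"
    else:
        strength = "very high correlation"
    return direction, strength

print(describe_correlation(-0.62))  # ('negative', 'high correlation')
print(describe_correlation(0.85))   # ('positive', 'very high correlation')
```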

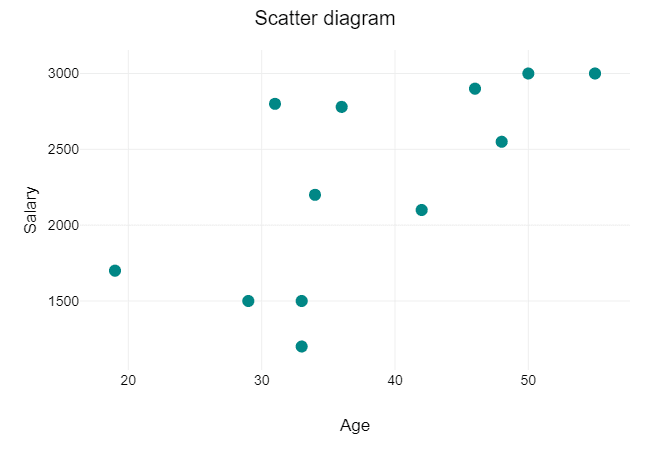

Scatter plot and correlation

Inspecting the relationship between two variables graphically in a scatter plot is just as important as calculating the correlation coefficient.

The scatter plot gives you a rough estimate of whether there is a correlation, whether it is linear or nonlinear, and whether there are outliers.
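The following small example shows why the scatter plot matters. In it, y is completely determined by x through a U-shaped, non-linear relationship, yet Pearson's r comes out as essentially zero because the coefficient only captures linear association. Plotting x against y (for example with matplotlib's `plt.scatter`) would reveal the pattern immediately. The data is constructed for illustration.

```python
import numpy as np

# A perfect but non-linear (U-shaped) relationship: y is fully determined by x
x = np.arange(-5, 6)
y = x ** 2

r = np.corrcoef(x, y)[0, 1]
print(f"Pearson r = {r:.3f}")  # close to zero despite the perfect dependence
```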


The scipy.stats module (part of SciPy) provides functions for working with various probability distributions, as the following examples show.

Example (normal distribution): calculating the probability of a value falling below a cutoff, and the cutoff for a given percentile, in a standard normal distribution (mean = 0, standard deviation = 1).

```python
from scipy.stats import norm

# Probability of a value being less than 1.96
prob_less_than = norm.cdf(1.96)
print(f"Probability of Z < 1.96: {prob_less_than:.4f}")  # 0.9750

# Value corresponding to the 97.5th percentile
cutoff_value = norm.ppf(0.975)
print(f"Value at 97.5th percentile: {cutoff_value:.4f}")  # 1.9600
```
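To get the probability of a value falling *within* a range, one approach is to subtract two cumulative probabilities. The sketch below does this for the standard normal distribution. It then repeats the calculation for a non-standard normal distribution using the `loc` (mean) and `scale` (standard deviation) parameters. The mean of 100 and standard deviation of 15 are a hypothetical example.

```python
from scipy.stats import norm

# Probability that a standard normal value lies between -1.96 and 1.96
p_central = norm.cdf(1.96) - norm.cdf(-1.96)
print(f"P(-1.96 < Z < 1.96): {p_central:.4f}")  # 0.9500

# Hypothetical scores with mean 100 and standard deviation 15:
# probability of a score within one standard deviation of the mean
p_within_1sd = norm.cdf(115, loc=100, scale=15) - norm.cdf(85, loc=100, scale=15)
print(f"P(85 < X < 115): {p_within_1sd:.4f}")  # 0.6827
```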

Example (binomial distribution): probability of getting exactly 7 heads in 10 flips of a fair coin.

```python
from scipy.stats import binom

n = 10   # Number of trials (coin flips)
p = 0.5  # Probability of success (getting a head)
k = 7    # Number of successes (heads)

prob_7_heads = binom.pmf(k, n, p)
print(f"Probability of getting 7 heads in 10 flips: {prob_7_heads:.4f}")  # 0.1172
```
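As a cross-check, the binomial probability mass function can be written out by hand as C(n, k) · p^k · (1 − p)^(n − k). Questions like "at least 7 heads" need a cumulative probability rather than the pmf. The sketch below uses the survival function `binom.sf`, which gives P(X > k), so P(X ≥ 7) is `sf(6)`.

```python
from math import comb
from scipy.stats import binom

n, p, k = 10, 0.5, 7

# The binomial pmf by hand: C(n, k) * p^k * (1 - p)^(n - k)
manual = comb(n, k) * p**k * (1 - p)**(n - k)
print(f"Manual P(X = 7): {manual:.4f}")  # 0.1172

# P(X >= 7) = P(X > 6), i.e. the survival function evaluated at 6
prob_at_least_7 = binom.sf(6, n, p)
print(f"P(X >= 7): {prob_at_least_7:.4f}")  # 0.1719
```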

Example (Poisson distribution): probability of a certain number of customer arrivals in an hour, given an average arrival rate.

```python
from scipy.stats import poisson

mu = 3  # Average number of events per interval
k = 2   # Number of events of interest

prob_2_arrivals = poisson.pmf(k, mu)
print(f"Probability of 2 arrivals when average is 3: {prob_2_arrivals:.4f}")  # 0.2240
```
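The Poisson pmf can likewise be checked against its formula, e^(−μ) · μ^k / k!. "At most" questions use the cumulative distribution function instead. This sketch reuses the same hypothetical average of 3 arrivals per hour.

```python
import math
from scipy.stats import poisson

mu = 3  # Average arrivals per hour

# The Poisson pmf by hand: e^(-mu) * mu^k / k!
manual = math.exp(-mu) * mu**2 / math.factorial(2)
print(f"Manual P(X = 2): {manual:.4f}")  # 0.2240

# Probability of at most 2 arrivals (0, 1 or 2) uses the cdf
prob_at_most_2 = poisson.cdf(2, mu)
print(f"P(X <= 2): {prob_at_most_2:.4f}")  # 0.4232
```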

Example (uniform distribution): probability that a random number drawn uniformly between 0 and 10 falls between 2 and 5.

```python
from scipy.stats import uniform

low = 0
high = 10

# scipy parameterises the uniform distribution by loc (lower bound) and scale (width)
prob_range = (uniform.cdf(5, loc=low, scale=high - low)
              - uniform.cdf(2, loc=low, scale=high - low))
print(f"Probability of a value between 2 and 5 in a uniform(0,10) distribution: {prob_range:.4f}")  # 0.3000
```
