E-Learn Knowledge Base

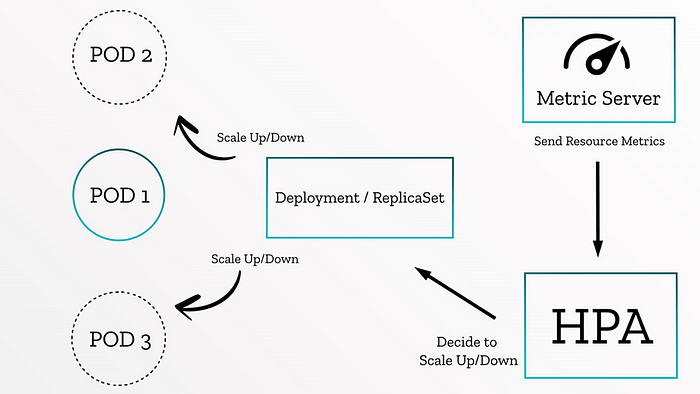

Kubernetes Horizontal Pod Autoscaling (HPA) is a handy Kubernetes resource that automatically scales your pods when load pushes them up to the resource thresholds you have defined.

As a DevOps engineer, you have probably seen workloads exceed their CPU and RAM allocations. This is fairly normal, but things get messy when nobody is around to take control of the pods. Fortunately, HPA fits this problem well: it automatically scales your pods when resource usage reaches the thresholds you set.

This is how Kubernetes HPA works: the metrics server reports resource consumption to the HPA, and based on the rules you have defined in the HPA manifest file, this object decides whether to scale the pods up or down. For example, if average CPU usage rises above a target of 80 percent, the HPA instructs the Deployment (and through it the ReplicaSet) to add pods; when usage later falls well below the target, say to 10 percent, the additional pods are removed.
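The scaling decision described above can be sketched in a few lines of Python. The formula is the one given in the Kubernetes documentation, desired = ceil(current × currentMetric / targetMetric), with a default tolerance of 10 percent. The function and parameter names are illustrative assumptions, and the real controller also accounts for missing metrics, pod readiness and stabilization, which this sketch ignores.

```python
import math


def desired_replicas(current_replicas, current_utilization, target_utilization,
                     tolerance=0.1):
    """Replica count the HPA would aim for, before min/max clamping."""
    ratio = current_utilization / target_utilization
    # Inside the tolerance band the replica count is left unchanged.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    return math.ceil(current_replicas * ratio)


def clamp(replicas, min_replicas, max_replicas):
    """Keep the result between minReplicas and maxReplicas."""
    return max(min_replicas, min(replicas, max_replicas))


# Two pods averaging 160% of their CPU request against an 80% target.
print(clamp(desired_replicas(2, 160, 80), 1, 4))
# Four pods averaging 20% against the same target.
print(clamp(desired_replicas(4, 20, 80), 1, 4))
```

Because the result is a ratio of measured to target utilization, one target value drives both scaling up and scaling down.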

Let’s get our hands dirty and deploy a simple project with Kubernetes horizontal auto scaling step by step:

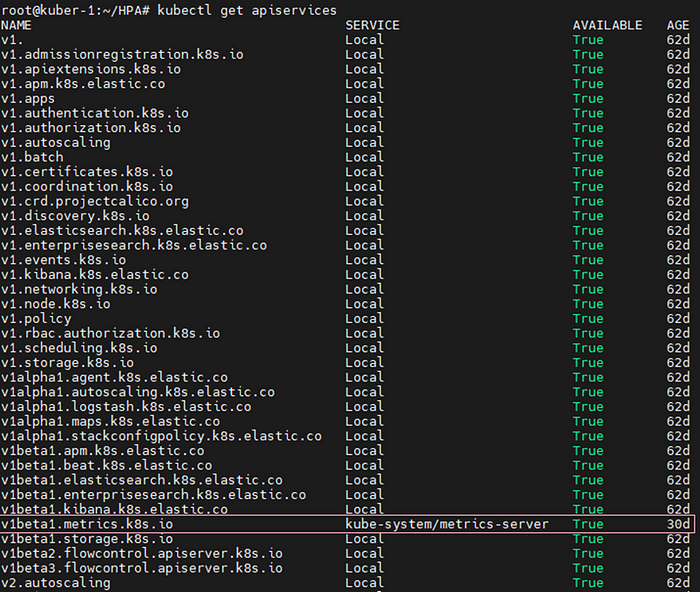

1. Deploy Metric Server

Metrics Server is an add-on for Kubernetes that is maintained in the kubernetes-sigs repository. It acts like a metrics exporter for the cluster, exposing the resource usage of nodes and pods for various purposes. As mentioned before, HPA uses the metrics server to observe the resource usage of pods.

To deploy the metrics server, get the latest manifest file from here, add these parameters to the metrics server Deployment in that file, and apply it.

    spec:
      hostNetwork: true
      containers:
      - args:
        - --cert-dir=/tmp
        - --secure-port=4443
        - --kubelet-preferred-address-types=InternalIP,ExternalIP,Hostname
        - --kubelet-use-node-status-port
        - --metric-resolution=15s
        - --kubelet-insecure-tls

kubectl apply -f metric-server.yaml

After deploying the manifest file, check that the metrics server is available, for example by listing the registered API services with kubectl get apiservices. The metrics API (v1beta1.metrics.k8s.io) must be listed and its AVAILABLE column must show True.

Now you can see the pod and node metrics with these two commands:

kubectl top pods

kubectl top nodes

2. Deploy an application

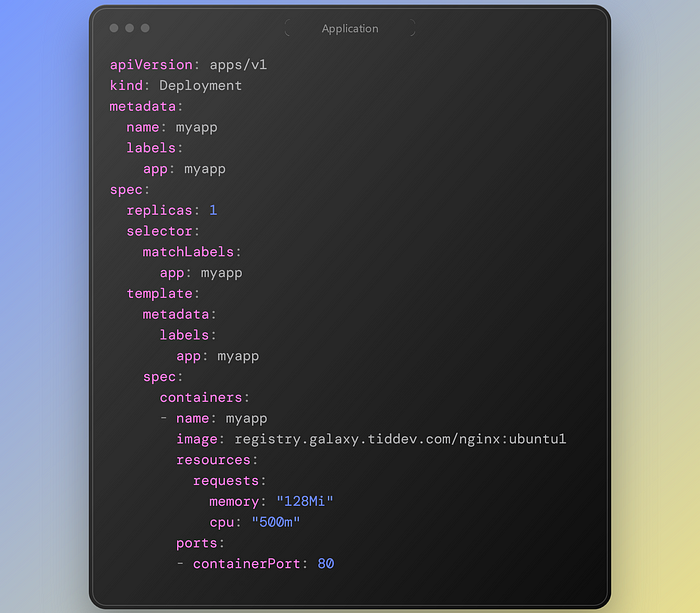

To test HPA you need a containerized application deployed in the cluster. It can be anything you want, but you must set resource limits or requests in its manifest YAML file, because the HPA calculates CPU utilization as a percentage of the requested resources.

    resources:
      limits:
        memory: "128Mi"
        cpu: "500m"

In my example I have a simple web server and defined limits on my resources.

3. Creating HPA

After deploying your application, it is time to create the HPA manifest.

This article covers both API versions: autoscaling/v1 and autoscaling/v2.

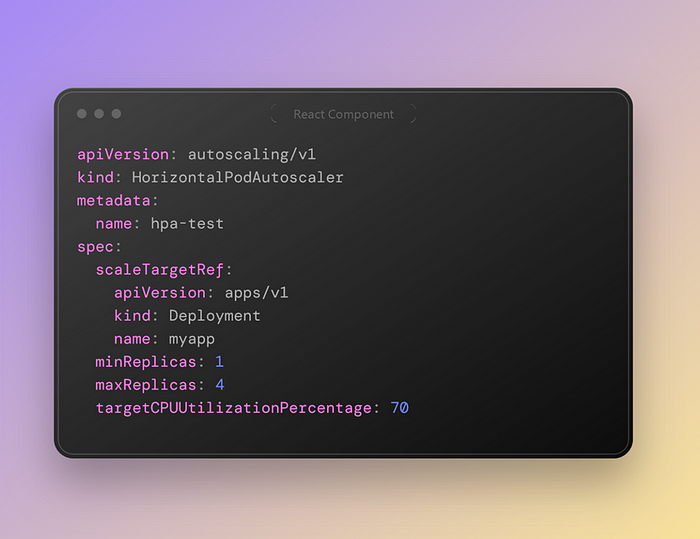

This is the manifest file for v1

In this approach we reference the target Deployment object by its API version and name.

We have also set the minimum and maximum number of replicas for that Deployment, together with a target CPU utilization of 70 percent. This means that if CPU usage rises above 70%, the Deployment is scaled up, to at most 4 replicas, and once CPU usage drops back below 70%, the replica count returns to 1.
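As a rough sketch of what such a v1 manifest contains, the Python snippet below builds it as a dictionary and prints it as JSON, which kubectl accepts as well as YAML. The Deployment name web-server and the HPA name derived from it are placeholders, not values taken from the original manifest; the replica bounds and the 70 percent target follow the description above.

```python
import json


def hpa_v1_manifest(deployment_name, min_replicas=1, max_replicas=4,
                    target_cpu_percent=70):
    """Build an autoscaling/v1 HorizontalPodAutoscaler for a Deployment."""
    return {
        "apiVersion": "autoscaling/v1",
        "kind": "HorizontalPodAutoscaler",
        "metadata": {"name": f"{deployment_name}-hpa"},  # hypothetical name
        "spec": {
            # The object to scale: API version, kind and name of the target.
            "scaleTargetRef": {
                "apiVersion": "apps/v1",
                "kind": "Deployment",
                "name": deployment_name,
            },
            "minReplicas": min_replicas,
            "maxReplicas": max_replicas,
            "targetCPUUtilizationPercentage": target_cpu_percent,
        },
    }


print(json.dumps(hpa_v1_manifest("web-server"), indent=2))
```

The printed JSON can be saved to a file or piped to kubectl apply -f - to create the object.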

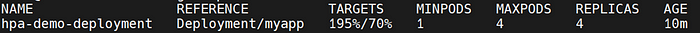

In my example, I added the “stress” package to the base image to drive up CPU usage, but you can generate load with HTTP requests or in any other way that suits your application. I opened a shell in the container and ran the command “stress -c 10”, which starts 10 CPU-bound worker processes.

This is the result after a few seconds: the pods scaled up to 4. Once the average CPU usage of the containers passed the 70% threshold, the replicas were scaled up until they reached the maximum of 4 pods.

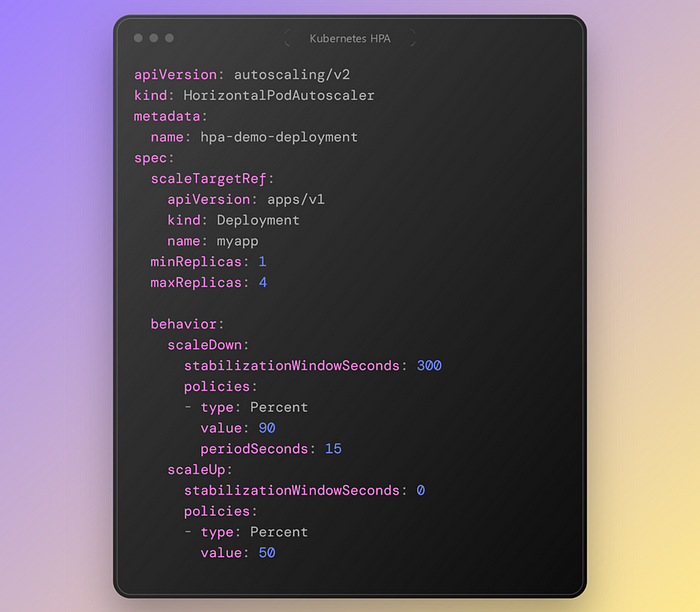

Autoscaling version 2 is the newer and better approach. It gives you more control over how pods are scaled and lets you assign different policies to the HPA.

In autoscaling/v2 you still have the min and max replicas keys, and they behave the same way. The most important new feature is behavior, which is divided into scaleDown and scaleUp sections that let you define separate policies for scaling down and scaling up.

In the scale-up section, if the pods' CPU usage rises above 50 percent, the pods are scaled up after 0 seconds (that is, immediately), up to 4 replicas. In the scale-down section, if CPU usage stays below 90%, then after a 15-second period, and once usage has been stable for 300 seconds, the pods are scaled down to one replica.
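The original v2 manifest is not reproduced in the text, so the following is only a sketch of what such a manifest could look like, again built in Python and printed as JSON. The Deployment name and the exact policy values are assumptions chosen to mirror the description (50 percent CPU target, immediate scale-up, a 300-second stabilization window and a 15-second period for scale-down). The 90% figure is not modelled because it is unclear which field it maps to.

```python
import json


def hpa_v2_manifest(deployment_name):
    """Build an autoscaling/v2 HorizontalPodAutoscaler with a behavior section."""
    return {
        "apiVersion": "autoscaling/v2",
        "kind": "HorizontalPodAutoscaler",
        "metadata": {"name": f"{deployment_name}-hpa"},  # hypothetical name
        "spec": {
            "scaleTargetRef": {
                "apiVersion": "apps/v1",
                "kind": "Deployment",
                "name": deployment_name,
            },
            "minReplicas": 1,
            "maxReplicas": 4,
            # v2 expresses the CPU target as an entry in a metrics list.
            "metrics": [{
                "type": "Resource",
                "resource": {
                    "name": "cpu",
                    "target": {"type": "Utilization", "averageUtilization": 50},
                },
            }],
            "behavior": {
                # React to load immediately.
                "scaleUp": {"stabilizationWindowSeconds": 0},
                # Wait for 300 s of stable low usage, then remove pods
                # in 15 s periods (assumed policy values).
                "scaleDown": {
                    "stabilizationWindowSeconds": 300,
                    "policies": [
                        {"type": "Percent", "value": 100, "periodSeconds": 15},
                    ],
                },
            },
        },
    }


print(json.dumps(hpa_v2_manifest("web-server"), indent=2))
```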

4. Extra points

There are some other points in the v2 API version which I should mention.

Look at this example:

    behavior:
      scaleDown:
        policies:
        - type: Percent
          value: 10
          periodSeconds: 60
        - type: Pods
          value: 5
          periodSeconds: 60
        selectPolicy: Min

We have two policies here. The first allows at most 10% of the current replicas to be removed per minute. To ensure that no more than 5 Pods are removed per minute, a second scale-down policy with a fixed size of 5 is added and selectPolicy is set to Min. Setting selectPolicy to Min means that the autoscaler chooses the policy that affects the smallest number of Pods.

selectPolicy: Min

If you set selectPolicy to “Min”, the HPA selects, among the policies that apply to a scaling decision, the one that allows the smallest change in the number of replicas.

Example: If you have two policies for scaling up based on CPU utilization, one allowing an increase of 150% and another allowing an increase of 5 pods, the policy that yields the smaller increase is selected. With 10 replicas, 150% would add 15 pods, so the 5-pod policy is selected.

selectPolicy: Max

Conversely, if you set selectPolicy to “Max”, the HPA selects the policy that allows the largest change in the number of replicas. This is the default.

Example: Using the same scenario as above, with 10 replicas the 150% policy (15 pods) allows a larger increase than the 5-pod policy, so the 150% policy is selected.

The selectPolicy value Disabled turns off scaling in the given direction. So, to prevent downscaling, set selectPolicy to Disabled in the scaleDown section.
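To make the effect of selectPolicy concrete, here is a small Python sketch that works out how many pods may be removed in one period under a set of scale-down policies. It is a simplified model, not the controller's code: it looks at a single period only, ignores periodSeconds, and assumes that percentages are rounded up to whole pods.

```python
def allowed_scale_down(current_replicas, policies, select_policy="Max"):
    """Pods that may be removed in one period under the given policies."""
    if select_policy == "Disabled":
        return 0  # scaling in this direction is switched off
    limits = []
    for policy in policies:
        if policy["type"] == "Pods":
            limits.append(policy["value"])
        elif policy["type"] == "Percent":
            # Integer ceiling of current_replicas * value / 100.
            limits.append(-(-current_replicas * policy["value"] // 100))
        else:
            raise ValueError(f"unknown policy type: {policy['type']}")
    chooser = min if select_policy == "Min" else max
    return chooser(limits)


policies = [
    {"type": "Percent", "value": 10, "periodSeconds": 60},
    {"type": "Pods", "value": 5, "periodSeconds": 60},
]
for mode in ("Min", "Max", "Disabled"):
    print(mode, allowed_scale_down(100, policies, mode))
```

With 100 replicas, the percentage policy allows 10 pods and the fixed policy allows 5, so Min picks 5 and Max picks 10. With 30 replicas the percentage policy allows only 3, so Min picks that one instead.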

Authors: T. C. Okenna

Register for this course: Enrol Now

Kubernetes on AWS: Step by Step

In this article we provide step-by-step instructions for several common ways to set up a Kubernetes cluster on AWS:

- Creating a cluster with kops — kops is a production-grade tool used to install, upgrade and manage Kubernetes on AWS.

- Creating a cluster with Amazon Elastic Kubernetes Service (EKS) — the managed Kubernetes service provided by Amazon. You can create a Kubernetes cluster with EKS using the AWS Management Console.

- Creating a cluster with Rancher — Rancher is a Kubernetes management platform that eases the deployment of Kubernetes and containers.

Deploying Kubernetes on AWS Using Kops

Kops is a production-grade tool used to install, upgrade, and operate highly available Kubernetes clusters on AWS and other cloud platforms using the command line.

Installing a Kubernetes Cluster on AWS

Before proceeding, make sure you have installed the kubectl, kops, and AWS CLI tools.

Configure AWS Client with Access Credentials

Make sure the AWS IAM user has the following permissions so that kops can function properly:

- AmazonEC2FullAccess

- AmazonRoute53FullAccess

- AmazonS3FullAccess

- IAMFullAccess

- AmazonVPCFullAccess

Configure the AWS CLI with this user’s credentials by running:

# aws configure

Create S3 Bucket for Cluster State Storage

Create a dedicated S3 bucket that will be used by kops to store the state representing the cluster. We’ll name this bucket my-cluster-state:

# aws s3api create-bucket --bucket my-cluster-state

Make sure to activate bucket versioning to be able to later recover or revert to a previous state:

# aws s3api put-bucket-versioning --bucket my-cluster-state --versioning-configuration Status=Enabled

DNS Setup

On the DNS side, you can go with either public or private DNS. For public DNS, a valid top-level domain or subdomain is required to create the cluster. DNS is required by worker nodes to discover the master and by the master to discover all the etcd servers. If your domain's registrar is not AWS, create a Route 53 hosted zone on AWS and change the nameserver records at your registrar accordingly.

In this example, we’ll be using a simple, private DNS to create a gossip-based cluster. The only requirement to set this up is for our cluster name to end with k8s.local.

Creating the Kubernetes Cluster

The following command will create a cluster with 1 master (an m3.medium instance) and 2 nodes (two t2.medium instances) in the us-west-2a availability zone:

# kops create cluster --name my-cluster.k8s.local --zones us-west-2a --dns private --master-size=m3.medium --master-count=1 --node-size=t2.medium --node-count=2 --state s3://my-cluster-state --yes

Some of the command options in the above example have default values: --master-size, --master-count, --node-size, and --node-count. We’ve used the default values, so the result would be the same if we hadn’t specified those options. Also, note that kops will create one master node in each availability zone specified, so this option: --zones us-west-2a,us-west-2b would result in 2 master nodes, one in each of the two zones (even if --master-count was not specified in the command line).

Note that cluster creation may take a while, as instances must boot, download the standard Kubernetes components and reach a “ready” state. Kops provides a command to check the state of the cluster and confirm that it is ready:

    # kops validate cluster --state=s3://my-cluster-state
    Using cluster from kubectl context: my-cluster.k8s.local

    Validating cluster my-cluster.k8s.local

    INSTANCE GROUPS
    NAME               ROLE    MACHINETYPE  MIN  MAX  SUBNETS
    master-us-west-2a  Master  m3.medium    1    1    us-west-2a
    nodes              Node    t2.medium    2    2    us-west-2a

    NODE STATUS
    NAME                                         ROLE    READY
    ip-172-20-32-203.us-west-2.compute.internal  node    True
    ip-172-20-36-109.us-west-2.compute.internal  node    True
    ip-172-20-61-137.us-west-2.compute.internal  master  True

    Your cluster my-cluster.k8s.local is ready

If you want to make some changes to the cluster, do so by running:

# kops edit cluster my-cluster.k8s.local

# kops update cluster my-cluster.k8s.local --yes

Upgrading the Cluster to a Later Kubernetes Release

Kops can upgrade an existing cluster (master and nodes) to the latest recommended release of Kubernetes without having to specify the exact version. Kops supports rolling cluster upgrades where the master and worker nodes are upgraded one by one.

1. Update Kubernetes

# kops upgrade cluster --name my-cluster.k8s.local --state s3://my-cluster-state --yes

2. Update the state store to match the cluster state.

# kops update cluster --name my-cluster.k8s.local --state s3://my-cluster-state --yes

3. Perform the rolling update.

# kops rolling-update cluster --name my-cluster.k8s.local --state s3://my-cluster-state --yes

This will perform updates on all instances in the cluster, first master and then workers.
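If you script the upgrade, the order of the three commands matters. The Python sketch below only assembles the command lines in that order and prints them; the helper name is hypothetical, and the flags are the ones used in the commands above.

```python
import shlex


def kops_upgrade_commands(cluster_name, state_store):
    """Return the three upgrade commands in the order they must run."""
    common = ["--name", cluster_name, "--state", state_store, "--yes"]
    return [
        ["kops", "upgrade", "cluster", *common],         # 1. update Kubernetes
        ["kops", "update", "cluster", *common],          # 2. sync the state store
        ["kops", "rolling-update", "cluster", *common],  # 3. roll the instances
    ]


for command in kops_upgrade_commands("my-cluster.k8s.local",
                                     "s3://my-cluster-state"):
    print(shlex.join(command))
```

To execute the steps rather than print them, each list can be passed to subprocess.run(command, check=True), which stops the sequence if a step fails.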

Delete the Cluster

To destroy an existing cluster that we used for experimenting or trials, for example, we can run:

# kops delete cluster my-cluster.k8s.local --state=s3://my-cluster-state --yes

Using Kubernetes EKS Managed Service

Amazon Elastic Kubernetes Service (EKS) is a fully managed service that takes care of all the cluster setup and creation, ensuring multi-AZ support on all clusters and automatic replacement of unhealthy instances (master or worker nodes).

By default, clusters in EKS consist of 3 masters spread across 3 different availability zones to protect against the failure of a single AWS availability zone.

Standing up a new Kubernetes cluster with EKS can be done simply using the AWS Management Console. After getting access to the cluster, containerized applications can be scheduled in the new cluster in the same fashion as with any other Kubernetes installation.

Launching Kubernetes on EC2 Using Rancher

Rancher is a complete container management platform that eases the deployment of Kubernetes and containers.

Setting Up Rancher in AWS

Rancher (the application) runs on RancherOS, which is available as an Amazon Machine Image (AMI), and thus can be deployed on an EC2 instance.

Create RancherOS Instance on EC2

After installing and configuring the AWS CLI tool, proceed to create an EC2 instance using the RancherOS AMI. Check the RancherOS documentation for the AMI IDs for each region. For example, this command:

$ aws ec2 run-instances --image-id ami-12db887d --count 1 --instance-type t2.micro --key-name my-key-pair --security-groups my-sg

will create one new t2.micro EC2 instance with RancherOS in the ap-south-1 AWS region. Make sure to use the correct key name and security group. Also, make sure the security group allows traffic to TCP port 8080 on the new instance.

Start Rancher Server

When the new instance is ready, just connect using ssh and start the Rancher server:

$ sudo docker run --name rancher-server -d --restart=unless-stopped -p 8080:8080 rancher/server:stable

This might take a few minutes. Once done, the UI can be accessed on port 8080 of the EC2 instance. Since by default anyone can access Rancher’s UI and API, it is recommended to set up access control.

Creating a Kubernetes cluster via Rancher in AWS

Configure Kubernetes environment template

An environment in Rancher is a logical entity for sharing deployments and resources with different sets of users. Environments are created from templates.

Create the Kubernetes Cluster (environment)

Adding a Kubernetes environment is just a matter of selecting the adequately configured template for our use case and inputting the cluster name. If access control is turned on, we can add members and select their membership role. Anyone added to the membership list would have access to the environment.

Add Hosts to Kubernetes Cluster

We need to add at least one host to the newly created Kubernetes environment. In this case, the hosts will be previously created AWS EC2 instances.

Once the first host has been added, Rancher will automatically start the deployment of the infrastructure (master), including Kubernetes services (e.g. kubelet, etcd, kube-proxy, etc.). Hosts that will be used as Kubernetes nodes will require TCP ports 10250 and 10255 to be open for kubectl. Make sure to review the full list of Rancher requirements for the hosts.

After adding hosts to the Kubernetes environment, it might take a few minutes for the Kubernetes cluster setup/update to complete.

Deploying Applications in the Kubernetes Cluster

Once the cluster is ready, containerized applications can be deployed using either the Rancher application catalog or kubectl.

Other Options for Deploying Kubernetes in the Cloud

Besides the Kubernetes deployment options already covered, other tools can be used to deploy Kubernetes on public clouds like AWS. Each tool has its unique features and building blocks:

- Heptio — Heptio provides a solution based on CloudFormation and kubeadm to deploy Kubernetes on AWS, and supports multi-AZ. Heptio is suitable for users already familiar with the CloudFormation AWS orchestration tool.

- Kismatic Enterprise Toolkit (KET) — KET is a collection of tools with sensible defaults which are production-ready to create enterprise-tuned clusters of Kubernetes.

- kubeadm — The kubeadm project is focused on a solution to build a simple cluster on AWS using Terraform. It is an adequate tool for tests and proofs-of-concept only as it doesn’t support multi-AZ and other advanced


Testing Kubernetes Application

Kubernetes enables the use of a Cloud Native model to build, deploy and scale applications. But it also introduces some challenges in how to test these applications, or rather modules, effectively.

What tests can I use for my microservices?

There are common types of tests that you can run with any application, but microservices architectures that run on Kubernetes introduce a new set of scenarios that need to be tested as well, which makes the list of test types for a microservices architecture longer.

Here's a list of test types:

- Unit Tests: These tests focus on individual components or functions of a microservice. They are usually performed during the development phase and don't involve Kubernetes.

- Integration Tests: Integration tests check if different components of an application work together as expected. They can involve testing communication between microservices or databases and interactions with external APIs.

- End-to-End (E2E) Tests: E2E tests simulate user workflows and verify that the entire application functions as expected from the user's perspective. These tests often require a Kubernetes environment to run the application components.

- Performance or Load Tests: Performance tests assess the application's ability to handle load and maintain responsiveness under various conditions. These tests may involve stress testing, load testing, and benchmarking on a Kubernetes cluster.

- Resilience Tests or Chaos Tests: These tests evaluate an application's ability to recover from failures and continue functioning. They can involve chaos testing, failover testing, and disaster recovery testing on a Kubernetes cluster.

Challenges of running tests in Kubernetes

There are amazing tools that can run multiple types of tests in Kubernetes, but they all share similar challenges that you might face when running these tests.

These challenges include:

- Managing a long pipeline of tests: putting all your tests in a single monolithic CI/CD pipeline can heavily slow down the integration and deployment of the microservices.

- Storing the results of tests: all test tools generate artifacts, which could be text-based, or a screenshot or a video of your app. You will have to think about how to store these artifacts so that you can retrieve them and see what went wrong.

- Knowing when to run a test: tests are usually run in a CI/CD pipeline, but not every type of test should be run on every execution of the pipeline. For example, compute-intensive tests or load tests should not be run on every CI/CD execution.

- Retriggering of tests: if your tests live in a CI/CD pipeline and you want to retrigger a test, you will have to retrigger the entire CI/CD pipeline. This can incur long wait times, especially if your pipelines are long.

These are some of the testing challenges we faced when building out applications with a microservice architecture. The right strategy to operationally manage these tests should help scale your testing efforts in your DevOps lifecycle.

Where and when can I run my tests?

Not all the tests are run at the same time and from the same place. For different scenarios and test types there's an optimal time in the DevOps lifecycle where you can test the application.

- Unit tests: These tests usually have a fast feedback loop, which lets developers run them on their own machines in seconds, and they also run quickly in your Continuous Integration pipeline.

- Integration tests: These tests are usually also executed in the Continuous Integration pipeline as they tend to be relatively fast and if well

- End-to-End (E2E) tests: These tests tend to exercise the entire system, so you want to be flexible about when to run them. Your CI/CD pipeline can be a place to hold these tests, but they tend to overcomplicate how you orchestrate the execution of the tests and the retrieval of the results.

- Performance or Load tests: These tests can be scheduled at specific intervals during the development cycle, triggered by specific events such as a new release candidate, or started manually. Performance tests should be executed in an environment that closely resembles the production environment to accurately gauge the application's performance under real-world conditions.
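One way to make the "when" explicit is to map pipeline events to the test suites they should trigger. The Python sketch below illustrates the idea; the event names and the exact mapping are assumptions for illustration, not rules from any particular CI system.

```python
# Which suites run for which pipeline event (illustrative mapping only).
SUITES_BY_EVENT = {
    "commit": ["unit", "integration"],
    "release_candidate": ["unit", "integration", "e2e", "performance"],
    "nightly_schedule": ["e2e", "performance"],
    "manual": ["e2e", "performance"],
}


def suites_for(event):
    """Return the test suites to trigger for a pipeline event."""
    return list(SUITES_BY_EVENT.get(event, []))


print(suites_for("commit"))
print(suites_for("release_candidate"))
```

Keeping the expensive suites out of the per-commit path is what keeps the feedback loop short for everyday changes.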

What tools can I use to test my microservices?

As described earlier, there are different types of tests that you can run in Kubernetes and at different

- Artillery: Artillery is a load testing tool that can be used to simulate high traffic on a Kubernetes application. It can help you identify performance issues, bottlenecks, and other issues that may arise under heavy load.

- Curl: Curl is a command-line tool that can be used to make HTTP requests to Kubernetes resources like pods, services, and deployments. You can use it to test connectivity and troubleshoot issues with Kubernetes resources.

- Cypress: Cypress is an end-to-end testing tool that can be used to test the functionality of your Kubernetes application. It can help you identify and fix issues with your application's user interface and workflows.

- Ginkgo: Ginkgo is a testing framework that can be used to write and run automated tests for your Kubernetes application. It's designed to be highly configurable and can be used to test a variety of different scenarios.

- Gradle: Gradle is a build automation tool that can be used to build and deploy your Kubernetes application. It can help you manage dependencies, run tests, and package your application for deployment.

- JMeter: JMeter is a load testing tool that can be used to simulate high traffic on your Kubernetes application. It can help you identify performance issues and bottlenecks under different load conditions.

- K6: K6 is another load testing tool that can be used to test the performance of your Kubernetes application. It's designed to be developer-friendly and can be used to write and run tests in JavaScript.

- KubePug: KubePug is a pre-upgrade checker that scans your cluster or Kubernetes manifests for deprecated or removed APIs. It can help you identify compatibility issues before you upgrade to a newer Kubernetes version.

- Maven: Maven is a build automation tool that can be used to build and deploy your Kubernetes application. It's designed to be highly configurable and can be used to manage dependencies, run tests, and package your application for deployment.

- Playwright: Playwright is an end-to-end testing tool that can be used to test the functionality of your Kubernetes application. It's designed to be highly configurable and can be used to test a variety of different scenarios.

- Postman: Postman is a tool that can be used to test your Kubernetes API endpoints. It's designed to be highly configurable and can be used to test a variety of different scenarios.

- SoapUI: SoapUI is a tool that can be used to test SOAP and REST web services. It can help you test the functionality of your Kubernetes application's API endpoints and identify issues with your application's data exchange.

Conclusion

In conclusion, Kubernetes has revolutionized the way we build, deploy, and scale applications using a Cloud Native model. However, it also brings unique challenges in testing microservices effectively.

To overcome these challenges and scale testing efforts in your DevOps lifecycle, it's essential to adopt the right strategy for managing and executing tests. By doing so, you can ensure the reliability, performance, and security of your Kubernetes-based microservices applications.

Authors: T. C. Okenna, Testkube

Introduction to Kubernetes (K8S)

Before Kubernetes, developers used Docker to package and run their applications inside containers. Docker made creating and running a single container easy, but it became hard to manage many containers running across different machines. For example, what if one container crashes? How do you restart it automatically? Or how do you handle hundreds of containers that need to work together?

That’s why Kubernetes was created. It helps manage and organize containers automatically. Kubernetes makes sure your containers keep running, can scale up when there’s more traffic, and can move to healthy machines if something goes wrong.

What is Kubernetes?

Kubernetes (also called K8s) is an open-source platform that automates the deployment, scaling, and management of containerized applications. In simple words, if you're running a lot of apps using containers (like with Docker), Kubernetes helps you organize and control them efficiently, just like a traffic controller for your apps.

You tell Kubernetes what your app should look like (how many copies to run, what to do if something fails, etc.), and Kubernetes takes care of the rest, making sure everything is up and running properly.

Example

Let’s say you created a food delivery app and it runs in a container using Docker. When your app becomes popular and thousands of users start placing orders, you need to run more copies of it to handle the load.

Instead of doing this manually, you use Kubernetes to say:

"Hey Kubernetes, always keep 5 copies of my app running. If one stops, replace it. And if more users come, increase the number of copies automatically."

Kubernetes listens to this instruction and makes it happen automatically.
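The idea behind this, comparing the desired state with the actual state and closing the gap, can be sketched as a toy Python function. This is a simplified model for illustration, not Kubernetes code; the pod names and the action tuples are made up.

```python
def reconcile(desired_replicas, pods):
    """Compare desired and actual state and return the actions that close the gap.

    `pods` maps a pod name to True (healthy) or False (crashed).
    """
    actions = []
    healthy = []
    for name, is_healthy in pods.items():
        if is_healthy:
            healthy.append(name)
        else:
            actions.append(("delete", name))  # crashed copies are removed
    gap = desired_replicas - len(healthy)
    if gap > 0:
        # Too few healthy copies: create replacements.
        actions.extend(("create", f"replacement-{i}") for i in range(gap))
    elif gap < 0:
        # Too many copies: remove the surplus.
        actions.extend(("delete", name) for name in healthy[:-gap])
    return actions


pods = {"app-1": True, "app-2": True, "app-3": False,
        "app-4": True, "app-5": True}
print(reconcile(5, pods))
```

Running a comparison like this over and over, rather than executing a one-off script, is what lets the system recover from crashes without anyone stepping in.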

Key Terminologies

Think of Kubernetes as a well-organized company where different teams and systems work together to run applications efficiently. Here’s how the key terms fit into this system:

1. Pod

A Pod is the smallest unit you can deploy in Kubernetes. It wraps one or more containers that need to run together, sharing the same network and storage. Containers inside a Pod can easily communicate and work as a single unit.

2. Node

A Node is a machine (physical or virtual) in a Kubernetes cluster that runs your applications. Each Node contains the tools needed to run Pods, including the container runtime (like Docker), the Kubelet (agent), and the Kube proxy (networking).

3. Cluster

A Kubernetes cluster is a group of computers (called nodes) that work together to run your containerized applications. These nodes can be real machines or virtual ones.

There are two types of nodes in a Kubernetes cluster:

- Master node (Control Plane):

  - Think of it as the brain of the cluster.

  - It makes decisions, like where to run applications, handles scheduling, and keeps track of everything.

- Worker nodes:

  - These are the machines that actually run your apps inside containers.

  - Each worker node has a Kubelet (agent), a container runtime (like Docker or containerd), and tools for networking and monitoring.

4. Deployment

A Deployment is a Kubernetes object used to manage a set of Pods running your containerized applications. It provides declarative updates, meaning you tell Kubernetes what you want, and it figures out how to get there.

5. ReplicaSet

A ReplicaSet ensures that the right number of identical Pods are running.

6. Service

A Service in Kubernetes is a way to connect applications running inside your cluster. It gives your Pods a stable way to communicate, even if the Pods themselves keep changing.

7. Ingress

Ingress is a way to manage external access to your services in a Kubernetes cluster. It provides HTTP and HTTPS routing to your services, acting as a reverse proxy.

8. ConfigMap

A ConfigMap stores configuration settings separately from the application, so changes can be made without modifying the actual code.

Imagine you have an application that needs some settings, like a database hostname or a feature flag. Instead of hardcoding these settings into your app, you store them in a ConfigMap. Your application can then read these settings from the ConfigMap at runtime, which makes it easy to update the settings without changing the app code. Sensitive values such as passwords or API keys belong in a Secret instead.
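A common way to consume a ConfigMap is to expose its keys to the container as environment variables. The Python sketch below shows the application side of that arrangement; the variable names and default values are hypothetical.

```python
import os


def load_settings(env=os.environ):
    """Read application settings injected as environment variables."""
    return {
        # Each key would correspond to one entry in the ConfigMap.
        "database_host": env.get("DATABASE_HOST", "localhost"),
        "feature_new_checkout":
            env.get("FEATURE_NEW_CHECKOUT", "false").lower() == "true",
        "page_size": int(env.get("PAGE_SIZE", "20")),
    }


# Simulate what the container would see after the ConfigMap is mounted.
example_env = {"DATABASE_HOST": "db.internal", "PAGE_SIZE": "50"}
print(load_settings(example_env))
```

Because the code only reads from the environment, the same image can run with different settings in each namespace or cluster.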

9. Secret

A Secret is a way to store sensitive information (like passwords, API keys, or tokens) securely in a Kubernetes cluster.
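One detail worth knowing is that values under a Secret's data field are base64-encoded, which is an encoding and not encryption. The Python sketch below builds such a manifest; the Secret name and the example value are placeholders.

```python
import base64
import json


def encode_secret_data(values):
    """Base64-encode each value, as the `data` field of a Secret expects."""
    return {
        key: base64.b64encode(value.encode("utf-8")).decode("ascii")
        for key, value in values.items()
    }


secret = {
    "apiVersion": "v1",
    "kind": "Secret",
    "metadata": {"name": "app-credentials"},  # hypothetical name
    "type": "Opaque",
    "data": encode_secret_data({"password": "s3cr3t"}),
}
print(json.dumps(secret, indent=2))

# Anyone who can read the object can decode the value again.
print(base64.b64decode(secret["data"]["password"]).decode("utf-8"))
```

This is why access to Secrets is usually restricted with RBAC, and why a cluster may additionally be configured to encrypt them at rest.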

10. Persistent Volume (PV)

A Persistent Volume (PV) in Kubernetes is a piece of storage in the cluster that you can use to store data — and it doesn’t get deleted when a Pod is removed or restarted.

11. Namespace

A Namespace is like a separate environment within your Kubernetes cluster. It helps you organize and isolate your resources like Pods, Services, and Deployments.

12. Kubelet

A Kubelet runs on each Worker Node and ensures Pods are running as expected.

13. Kube-proxy

Kube-proxy manages networking inside the cluster, ensuring different Pods can communicate.

Benefits of using Kubernetes

1. Automated Deployment and Management

- If you use Kubernetes to deploy your application, little manual intervention is needed. Kubernetes automates the deployment, scaling, and management of the containerized application.

- Kubernetes reduces the errors that humans can make, which makes deployments more reliable.

2. Scalability

- You can scale the application containers depending on the incoming traffic. Kubernetes offers horizontal pod autoscaling, so pods are scaled automatically depending on the load.

3. High Availability

- You can achieve high availability for your application with the help of Kubernetes, which also reduces latency issues for end users.

4. Cost-Effectiveness

- Unnecessary use of infrastructure increases cost. Kubernetes helps you use resources more efficiently and avoid overprovisioning infrastructure.

5. Improved Developer Productivity

- Developers can focus on writing code rather than managing deployments, as Kubernetes automates much of the deployment and scaling process.

Deploying and Managing Containerized Applications with Kubernetes

Follow the steps mentioned below to deploy the application in the form of containers.

Step 1: Install Kubernetes and set up a Kubernetes cluster. There should be at least one master node and two worker nodes. You can set up the cluster on any cloud that provides Kubernetes as a service.

Step 2: Now, create a deployment manifest file. In this file, you specify the desired number of Pods, the container image, and the resources required. After creating the manifest, apply it using the kubectl command.

Step 3: After creating the pods, you need to expose the application to the outside. For that, write one more manifest file that defines a Service, including the service type (e.g., LoadBalancer or ClusterIP), ports, and selectors.
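Steps 2 and 3 can be sketched as follows. The Python snippet builds a Deployment and a matching Service as dictionaries and prints them as a single JSON List object, a format kubectl accepts alongside YAML. The application name, image tag, replica count and resource values are placeholders.

```python
import json


def deployment_manifest(name, image, replicas=2, container_port=80):
    """Deployment: desired number of Pods, container image and resources."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": labels},
        "spec": {
            "replicas": replicas,
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {"containers": [{
                    "name": name,
                    "image": image,
                    "ports": [{"containerPort": container_port}],
                    "resources": {
                        "requests": {"cpu": "250m", "memory": "64Mi"},
                        "limits": {"cpu": "500m", "memory": "128Mi"},
                    },
                }]},
            },
        },
    }


def service_manifest(name, port=80, target_port=80,
                     service_type="LoadBalancer"):
    """Service: type, ports and the selector that finds the Pods."""
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": name},
        "spec": {
            "type": service_type,
            "selector": {"app": name},  # must match the Pod labels above
            "ports": [{"port": port, "targetPort": target_port}],
        },
    }


bundle = {
    "apiVersion": "v1",
    "kind": "List",
    "items": [
        deployment_manifest("web-server", "nginx:1.25"),
        service_manifest("web-server"),
    ],
}
print(json.dumps(bundle, indent=2))
```

The Service finds its Pods purely through the selector, so the labels in the Pod template and the selector in the Service have to stay in sync.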

Use Cases of Kubernetes in Real-World Scenarios

The following are some of the use cases of Kubernetes in real-world scenarios:

1. E-commerce

You can deploy and manage e-commerce websites and, with autoscaling and load balancing, handle millions of users and transactions.

2. Media and Entertainment

You can store static and dynamic content and deliver it to end users across the world with low latency.

3. Financial Services

Kubernetes is well suited to critical applications because of the level of security it offers.

4. Healthcare

You can run applications that store patient data and track patients' health outcomes.

Kubernetes v/s Other Container Orchestration Platforms

| Feature | Kubernetes | Docker Swarm | OpenShift | Nomad |
|---|---|---|---|---|
| Deployment | Containers are deployed using the kubectl CLI, and all the configuration required for the containers is defined in manifests. | Containers are deployed using a Docker Compose file, which contains all the configuration required for the containers. | You can deploy containers using manifests or the OpenShift CLI. | An HCL configuration file is required to deploy containers. |
| Scalability | You can handle heavy incoming traffic by scaling pods across multiple nodes. | Containers can be scaled, but not as efficiently as in Kubernetes. | Containers can be scaled, but not as efficiently as in Kubernetes. | Containers can be scaled, but not as efficiently as in Kubernetes. |
| Networking | You can use different types of plugins to increase flexibility. | Simple to use, which makes it easier than Kubernetes. | The networking model is very advanced. | You can integrate as many plugins as you want. |
| Storage | Supports multiple storage options such as persistent volume claims, and you can also attach cloud-based storage. | You can use local storage more flexibly. | Supports local and cloud storage. | Supports local and cloud storage. |

Features of Kubernetes

The following are some important features of Kubernetes:

1. Automated Scheduling

Kubernetes provides an advanced scheduler to launch containers on cluster nodes. It performs resource optimization.

2. Self-Healing Capabilities

It reschedules, replaces, and restarts containers that have died.

3. Automated Rollouts and Rollbacks

It supports rollouts and rollbacks for the desired state of the containerized application.

4. Horizontal Scaling and Load Balancing

Kubernetes can scale up and scale down the application as per the requirements.

5. Resource Utilization

Kubernetes provides resource utilization monitoring and optimization, ensuring containers are using their resources efficiently.

6. Support for multiple clouds and hybrid clouds

Kubernetes can be deployed on different cloud platforms and run containerized applications across multiple clouds.

7. Extensibility

Kubernetes is very extensible and can be extended with custom plugins and controllers.

8. Community Support

Kubernetes has a large and active community with frequent updates, bug fixes, and new features being added.
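To make the horizontal scaling feature above more concrete, here is a minimal Python sketch of the replica calculation described in the Kubernetes HPA documentation: the desired count is the current count multiplied by the ratio of the observed metric to the target, rounded up. The function names and sample numbers are illustrative assumptions, and the sketch leaves out the tolerance and stabilization behavior of the real controller.

```python
import math


def desired_replicas(current_replicas, current_metric, target_metric):
    """Replica count suggested by the scaling rule, before min/max clamping."""
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    return math.ceil(current_replicas * (current_metric / target_metric))


def clamp(replicas, min_replicas, max_replicas):
    """Keep the result inside the configured replica bounds."""
    return max(min_replicas, min(max_replicas, replicas))


if __name__ == "__main__":
    # 4 pods averaging 90% CPU against an 80% target: ceil(4 * 90 / 80) = 5
    print(desired_replicas(4, 90, 80))
    # 5 pods averaging 10% CPU would shrink to 1, but the minimum of 2 applies
    print(clamp(desired_replicas(5, 10, 80), min_replicas=2, max_replicas=10))
```

The clamp step mirrors the minimum and maximum replica settings of an HPA object, so a quiet period never scales the application below its configured floor.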

Kubernetes v/s Docker

The following table compares Docker and Kubernetes:

| Feature | Docker | Kubernetes |
|---|---|---|
| Purpose | A containerization platform to build, ship, and run containers. | A container orchestration tool that manages, deploys, and scales containerized applications. |
| Developed By | Docker Inc. | Originally by Google, now managed by CNCF. |
| Container Management | Manages individual containers. | Manages multiple containers across a cluster. |
| Scaling | Manual scaling of containers using docker run or docker-compose. | Auto-scaling with Horizontal Pod Autoscaler (HPA). |
| Networking | Uses a single-host bridge network by default. | Uses a cluster-wide network to connect services across multiple nodes. |
| Load Balancing | Basic load balancing via Docker Swarm. | Advanced load balancing with Services and Ingress. |
| Self-Healing | Containers need to be restarted manually if they fail. | Automatically replaces failed containers (Pods). |
| Rolling Updates | Not natively supported; requires recreating containers manually. | Supports zero-downtime rolling updates for applications. |
| Storage | Local persistent storage. | Persistent storage with Persistent Volumes (PV) & Persistent Volume Claims (PVC). |
| Cluster Management | Limited to Docker Swarm (less complex, but less powerful than Kubernetes). | Manages large-scale distributed systems with multiple nodes. |
| Use Case | Best for developing and running containerized apps on a single machine. | Best for running, managing, and scaling containerized applications across multiple machines (clusters). |

Architecture of Kubernetes

Kubernetes follows a client-server architecture, with the master installed on one machine and the nodes on separate Linux machines. It uses a master-worker model in which the master manages Docker containers across multiple Kubernetes nodes. A master and the worker nodes it controls constitute a “Kubernetes cluster”. A developer deploys an application in Docker containers with the assistance of the Kubernetes master.


Key Components of Kubernetes

1. Kubernetes-Master Node Components

The Kubernetes master is responsible for managing the entire cluster: it coordinates all activities inside the cluster and communicates with the worker nodes to keep Kubernetes and your application running. It is the entry point for all administrative tasks. When we install Kubernetes, four primary master components are installed. The components of the Kubernetes master node are:

API Server

The API server is the entry point for all the REST commands used to control the cluster. All administrative tasks go through the API server on the master node: creating, deleting, updating, or displaying any Kubernetes object requires a request to it. The API server validates and configures API objects such as pods, services, replication controllers, and deployments, and it is responsible for exposing APIs for every operation. We can interact with these APIs using a tool called kubectl. 'kubectl' is a small binary written in Go that talks to the API server to perform the operations we issue from the command line. It is the command-line interface for running commands against Kubernetes clusters.

Scheduler

The scheduler is the service in the master responsible for distributing the workload. It tracks the resource utilization of each worker node and places each pod on a node that has the resources available to accept it, taking into account the constraints you specify in the configuration file.
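The placement decision described above can be pictured as a filter step followed by a scoring step. The Python sketch below is only an illustration under stated assumptions: the `Node` fields, the `pick_node` helper, and the "most CPU left over" scoring rule are hypothetical and are not the actual kube-scheduler algorithm.

```python
from dataclasses import dataclass


@dataclass
class Node:
    name: str
    free_cpu_millis: int
    free_memory_mib: int


def pick_node(nodes, cpu_request, memory_request):
    """Return the name of the chosen node, or None if no node fits the pod."""
    # Filter: keep only nodes with enough free CPU and memory for the request.
    feasible = [
        n for n in nodes
        if n.free_cpu_millis >= cpu_request and n.free_memory_mib >= memory_request
    ]
    if not feasible:
        return None
    # Score (hypothetical rule): prefer the node with the most CPU left after
    # placement, using leftover memory as the tie-breaker.
    best = max(
        feasible,
        key=lambda n: (n.free_cpu_millis - cpu_request, n.free_memory_mib - memory_request),
    )
    return best.name


if __name__ == "__main__":
    cluster = [
        Node("node-a", free_cpu_millis=500, free_memory_mib=1024),
        Node("node-b", free_cpu_millis=2000, free_memory_mib=4096),
        Node("node-c", free_cpu_millis=4000, free_memory_mib=256),
    ]
    # Only node-b has both 1000m CPU and 512 MiB free.
    print(pick_node(cluster, cpu_request=1000, memory_request=512))
```

When no node passes the filter, the sketch returns `None`, which corresponds to a pod that stays unscheduled until resources become available.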

Controller Manager

Also known as controllers. The controller manager is a daemon that runs in a non-terminating loop and is responsible for collecting information and sending it to the API server. It regulates the Kubernetes cluster by performing lifecycle functions such as namespace creation and lifecycle-event garbage collection, terminated-pod garbage collection, cascading-deletion garbage collection, node garbage collection, and many more. Basically, a controller watches the desired state of the cluster; if the current state does not match the desired state, the control loop takes corrective steps until it does. The key controllers are the replication controller, endpoint controller, namespace controller, and service account controller. In this way, controllers are responsible for the overall health of the entire cluster: they ensure that nodes are up and running and that the correct pods are running as described in the spec file.
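The desired-state versus current-state comparison at the heart of a controller can be sketched in a few lines of Python. This is a simplified illustration, not the controller manager's real code: the `reconcile` function and the action strings are assumptions made for the example.

```python
def reconcile(desired_replicas, running_pods):
    """Return the actions that move the current state toward the desired state."""
    actions = []
    diff = desired_replicas - len(running_pods)
    if diff > 0:
        # Too few pods: create the missing ones.
        actions.extend(["create pod"] * diff)
    elif diff < 0:
        # Too many pods: delete the surplus (here, the last ones in the list).
        actions.extend(f"delete {name}" for name in running_pods[diff:])
    return actions


if __name__ == "__main__":
    print(reconcile(3, ["web-1"]))                    # two pods are missing
    print(reconcile(1, ["web-1", "web-2", "web-3"]))  # two pods are surplus
    print(reconcile(2, ["web-1", "web-2"]))           # nothing to do
```

A real controller repeats this comparison continuously, so a pod that dies later is noticed on a subsequent pass and replaced.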

etcd

It is a distributed key-value lightweight database. In Kubernetes, it is a central database for storing the current cluster state at any point in time and is also used to store the configuration details such as subnets, config maps, etc. It is written in the Go programming language.

2. Kubernetes-Worker Node Components

Kubernetes Worker node contains all the necessary services to manage the networking between the containers, communicate with the master node, and assign resources to the containers scheduled. The components of the Kubernetes Worker node are:

Kubelet

It is the primary node agent. It runs on each worker node inside the cluster and communicates with the master node. It gets the pod specifications through the API server, runs the containers associated with the pods, and ensures that the containers described in the pods are running and healthy. If kubelet notices any issues with the pods running on the worker node, it tries to restart the pod on the same node. If the issue is with the worker node itself, the Kubernetes master node detects the node failure and decides to recreate the pods on another healthy node.

Kube-Proxy

It is the core networking component inside the Kubernetes cluster. It is responsible for maintaining the entire network configuration. Kube-Proxy maintains the distributed network across all the nodes, pods, and containers and exposes services to the outside world. It acts as a network proxy and load balancer for a service on a single worker node and manages the network routing for TCP and UDP packets. It listens to the API server for the creation and deletion of each service endpoint, and for each service endpoint it sets up a route so that you can reach it.
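One way to picture the behavior described above is a table that maps each service to its current endpoints and is updated whenever an endpoint is created or deleted. The Python sketch below is a conceptual model only: the `ServiceTable` class and its method names are hypothetical, and the real kube-proxy programs kernel-level rules on the node rather than forwarding traffic in application code like this.

```python
import random


class ServiceTable:
    """Maps a service name to the pod endpoints currently backing it."""

    def __init__(self):
        self._endpoints = {}  # service name -> list of "ip:port" strings

    def on_endpoint_added(self, service, endpoint):
        # Called when the API server reports a new endpoint for the service.
        self._endpoints.setdefault(service, []).append(endpoint)

    def on_endpoint_removed(self, service, endpoint):
        # Called when the API server reports that an endpoint was deleted.
        backends = self._endpoints.get(service, [])
        if endpoint in backends:
            backends.remove(endpoint)

    def route(self, service, rng=random):
        """Pick one backend for a new connection to the service."""
        backends = self._endpoints.get(service)
        if not backends:
            raise LookupError(f"no endpoints for service {service!r}")
        return rng.choice(backends)


if __name__ == "__main__":
    table = ServiceTable()
    table.on_endpoint_added("web", "10.0.0.5:8080")
    table.on_endpoint_added("web", "10.0.0.6:8080")
    print(table.route("web"))  # one of the two endpoints
    table.on_endpoint_removed("web", "10.0.0.5:8080")
    print(table.route("web"))  # only 10.0.0.6:8080 is left
```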

Pods

A pod is a group of one or more containers that are deployed together on the same host. Pods let us deploy multiple dependent containers together. The pod acts as a wrapper around these containers, so we interact with and manage them primarily through the pod.

Docker

Docker is the containerization platform used to package your application and all its dependencies together as containers, so that the application works seamlessly in any environment, whether development, test, or production. It is a tool designed to make it easier to create, deploy, and run applications by using containers. Docker is a leading software container platform. It was launched in 2013 by a company called dotCloud and is written in the Go language. In the years since its launch, many teams have shifted to it from VMs. Docker is designed to benefit both developers and system administrators, which makes it a part of many DevOps toolchains. Developers can write code without worrying about the testing and production environment. Sysadmins have less infrastructure to worry about, because containers can easily be scaled up and down. Docker comes into play at the deployment stage of the software development cycle.

Application of Kubernetes

The following are some applications of Kubernetes:

1. Microservices Architecture

Kubernetes is well-suited for managing microservices architectures, which involve breaking down complex applications into smaller, modular components that can be independently deployed and managed.

2. Cloud-native Development

Kubernetes is a key component of cloud-native development, which involves building applications that are designed to run on cloud infrastructure and take advantage of the scalability, flexibility, and resilience of the cloud.

3. Continuous Integration and Delivery

Kubernetes integrates well with CI/CD pipelines, making it easier to automate the deployment process and roll out new versions of your application with minimal downtime.

4. Hybrid and Multi-Cloud Deployments

Kubernetes provides a consistent deployment and management experience across different cloud providers, on-premise data centers, and even developer laptops, making it easier to build and manage hybrid and multi-cloud deployments.

5. High-Performance Computing

Kubernetes can be used to manage high-performance computing workloads, such as scientific simulations, machine learning, and big data processing.

6. Edge Computing

Kubernetes is also being used in edge computing applications, where it can be used to manage containerized applications running on edge devices such as IoT devices or network appliances.


Continuous Integration and Continuous Delivery

Continuous integration (CI) and continuous delivery (CD) are key pillars of modern development. If you’re new to these concepts, here’s a quick rundown:

- Continuous integration (CI): Developers frequently commit their code to a shared repository, triggering automated builds and tests. This practice prevents conflicts and ensures defects are caught early.

- Continuous delivery (CD): With CI in place, organizations can then confidently automate releases. That means shorter release cycles, fewer surprises, and the ability to roll back changes quickly if needed.

Leveraging CI/CD can dramatically improve your team’s velocity and quality. Once you experience the benefits of dependable, streamlined pipelines, there’s no going back.

Why combine Docker and Jenkins for CI/CD?

Docker allows you to containerize your applications, creating consistent environments across development, testing, and production. Jenkins, on the other hand, helps you automate tasks such as building, testing, and deploying your code. I like to think of Jenkins as the tireless “assembly line worker,” while Docker provides identical “containers” to ensure consistency throughout your project’s life cycle.

Here’s why blending these tools is so powerful:

- Consistent environments: Docker containers guarantee uniformity from a developer’s laptop all the way to production. This consistency reduces errors and eliminates the dreaded “works on my machine” excuse.

- Speedy deployments and rollbacks: Docker images are lightweight. You can ship or revert changes at the drop of a hat — perfect for short delivery process cycles where minimal downtime is crucial.

- Scalability: Need to run 1,000 tests in parallel or support multiple teams working on microservices? No problem. Spin up multiple Docker containers whenever you need more build agents, and let Jenkins orchestrate everything with Jenkins pipelines.

For a DevOps junkie like me, this synergy between Jenkins and Docker is a dream come true.

Setting up your CI/CD pipeline with Docker and Jenkins

Before you roll up your sleeves, let’s cover the essentials you’ll need:

- Docker Desktop (or a Docker server environment) installed and running. You can get Docker for various operating systems.

- Jenkins downloaded from Docker Hub or installed on your machine. These days, you’ll want jenkins/jenkins:lts (the long-term support image) rather than the deprecated library/jenkins image.

- Proper permissions for Docker commands and the ability to manage Docker images on your system.

- A GitHub or similar code repository where you can store your Jenkins pipeline configuration (optional, but recommended).

Pro tip: If you’re planning a production setup, consider a container orchestration platform like Kubernetes. This approach simplifies scaling Jenkins, updating Jenkins, and managing additional Docker servers for heavier workloads.

Building a robust CI/CD pipeline with Docker and Jenkins

After prepping your environment, it’s time to create your first Jenkins-Docker pipeline. Below, I’ll walk you through common steps for a typical pipeline — feel free to modify them to fit your stack.

1. Install necessary Jenkins plugins

Jenkins offers countless plugins, so let’s start with a few that make configuring Jenkins with Docker easier:

- Docker Pipeline Plugin

- Docker

- CloudBees Docker Build and Publish

How to install plugins:

- Open Manage Jenkins > Manage Plugins in Jenkins.

- Click the Available tab and search for the plugins listed above.

- Install them (and restart Jenkins if needed).

Code example (plugin installation via CLI):

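```shell
# Install plugins using the Jenkins CLI (replace <jenkins-server> with your host).
# install-plugin expects plugin IDs: Docker Pipeline is "docker-workflow"
# and the Docker plugin is "docker-plugin".
java -jar jenkins-cli.jar -s http://<jenkins-server>:8080/ install-plugin docker-workflow
java -jar jenkins-cli.jar -s http://<jenkins-server>:8080/ install-plugin docker-plugin
java -jar jenkins-cli.jar -s http://<jenkins-server>:8080/ install-plugin docker-build-publish
```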

Pro tip (advanced approach): If you’re aiming for a fully infrastructure-as-code setup, consider using Jenkins configuration as code (JCasC). With JCasC, you can declare all your Jenkins settings — including plugins, credentials, and pipeline definitions — in a YAML file. This means your entire Jenkins configuration is version-controlled and reproducible, making it effortless to spin up fresh Jenkins instances or apply consistent settings across multiple environments. It’s especially handy for large teams looking to manage Jenkins at scale.

2. Set up your Jenkins pipeline

In this step, you’ll define your pipeline. A Jenkins “pipeline” job uses a Jenkinsfile (stored in your code repository) to specify the steps, stages, and environment requirements.

Example Jenkinsfile:

```groovy
pipeline {
    agent any

    stages {
        stage('Checkout') {
            steps {
                git branch: 'main', url: 'https://github.com/your-org/your-repo.git'
            }
        }
        stage('Build') {
            steps {
                script {
                    // Tag the image with the Jenkins build number
                    dockerImage = docker.build("your-org/your-app:${env.BUILD_NUMBER}")
                }
            }
        }
        stage('Test') {
            steps {
                // Double quotes let Groovy interpolate the build number before the shell runs
                sh "docker run --rm your-org/your-app:${env.BUILD_NUMBER} ./run-tests.sh"
            }
        }
        stage('Push') {
            steps {
                script {
                    docker.withRegistry('https://index.docker.io/v1/', 'dockerhub-credentials') {
                        dockerImage.push()
                    }
                }
            }
        }
    }
}
```

Let’s look at what’s happening here:

- Checkout: Pulls your repository.

- Build: Builds a Docker image (your-org/your-app) with the build number as a tag.

- Test: Runs your test suite inside a fresh container, ensuring Docker containers create consistent environments for every test run.

- Push: Pushes the image to your Docker registry (e.g., Docker Hub) if the tests pass.

3. Configure Jenkins for automated builds

Now that your pipeline is set up, you’ll want Jenkins to run it automatically:

- Webhook triggers: Configure your source control (e.g., GitHub) to send a webhook whenever code is pushed. Jenkins will kick off a build immediately.

- Poll SCM: Jenkins periodically checks your repo for new commits and starts a build if it detects changes.

Which trigger method should you choose?

- Webhook triggers are ideal if you want near real-time builds. As soon as you push to your repo, Jenkins is notified, and a new build starts almost instantly. This approach is typically more efficient, as Jenkins doesn’t have to continuously check your repository for updates. However, it requires that your source control system and network environment support webhooks.

- Poll SCM is useful if your environment can’t support incoming webhooks — for example, if you’re behind a corporate firewall or your repository isn’t configured for outbound hooks. In that case, Jenkins routinely checks for new commits on a schedule you define (e.g., every five minutes), which can add a small delay and extra overhead but may simplify setup in locked-down environments.

Personal experience: I love webhook triggers because they keep everything as close to real-time as possible. Polling works fine if webhooks aren’t feasible, but you’ll see a slight delay between code pushes and build starts. It can also generate extra network traffic if your polling interval is too frequent.
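To see why polling adds a delay, it helps to model what a poll cycle does: compare the repository's current head with the last commit that was built, and start a build only when they differ. The Python sketch below is a simplified model of that idea, not Jenkins code. The helper names and the commit IDs are hypothetical.

```python
def should_build(last_built_commit, fetch_head_commit):
    """Run one polling cycle and return (build_needed, head_commit)."""
    head = fetch_head_commit()
    return head != last_built_commit, head


def simulate_polling(observed_heads):
    """Return the commits that would be built, given the head seen at each poll."""
    last_built = None
    built = []
    for head in observed_heads:
        needed, commit = should_build(last_built, lambda: head)
        if needed:
            built.append(commit)
            last_built = commit
    return built


if __name__ == "__main__":
    # Four polls: the head changes once, between the second and third poll.
    print(simulate_polling(["a1", "a1", "b2", "b2"]))
```

In this model a build starts only at the next poll after a push, and if several commits land between two polls, only the newest head is built. A webhook avoids both effects because the repository notifies Jenkins on every push.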

4. Build, test, and deploy with Docker containers

Here comes the fun part — automating the entire cycle from build to deploy:

- Build Docker image: After pulling the code, Jenkins calls docker.build to create a new image.

- Run tests: Automated unit or acceptance tests run inside a container spun up from that image, ensuring consistency.

- Push to registry: Assuming tests pass, Jenkins pushes the tagged image to your Docker registry — this could be Docker Hub or a private registry.

- Deploy: Optionally, Jenkins can then deploy the image to a remote server or a container orchestrator (Kubernetes, etc.).

This streamlined approach ensures every step — build, test, deploy — lives in one cohesive pipeline, preventing those “where’d that step go?” mysteries.

5. Optimize and maintain your pipeline

Once your pipeline is up and running, here are a few maintenance tips and enhancements to keep everything running smoothly:

- Clean up images: Routine cleanup of Docker images can reclaim space and reduce clutter.

- Security updates: Stay on top of updates for Docker, Jenkins, and any plugins. Applying patches promptly helps protect your CI/CD environment from vulnerabilities.

- Resource monitoring: Ensure Jenkins nodes have enough memory, CPU, and disk space for builds. Overworked nodes can slow down your pipeline and cause intermittent failures.
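For the image cleanup tip above, one possible retention rule is to keep the newest few build-numbered tags and remove the rest. The Python sketch below only computes which tags would be removed. It assumes images are tagged with the Jenkins build number, as in the earlier Jenkinsfile, and the `tags_to_delete` helper and its `keep_latest` default are hypothetical choices for illustration.

```python
def tags_to_delete(tags, keep_latest=5):
    """Return build-number tags older than the newest `keep_latest` builds."""
    if keep_latest < 0:
        raise ValueError("keep_latest must not be negative")
    # Only purely numeric tags are treated as build numbers; tags such as
    # "latest" are never selected for deletion.
    numeric = sorted((t for t in tags if t.isdigit()), key=int, reverse=True)
    return numeric[keep_latest:]


if __name__ == "__main__":
    existing = ["12", "9", "10", "latest", "11"]
    # Keeps builds 12 and 11, selects 10 and 9 for removal.
    print(tags_to_delete(existing, keep_latest=2))
```

The returned list could then be fed to whatever removal command your registry or Docker host uses. The sketch deliberately stops short of deleting anything.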

Pro tip: In large projects, consider keeping builds off your Jenkins controller by running them on Jenkins agents in ephemeral Docker containers. If an agent goes down or becomes stale, you can quickly spin up a fresh one, which gives every build a clean, consistent environment and reduces the load on your main Jenkins server.

Why use Declarative Pipelines for CI/CD?

Although Jenkins supports multiple pipeline syntaxes, Declarative Pipelines stand out for their clarity and resource-friendly design. Here’s why:

- Simplified, opinionated syntax: Everything is wrapped in a single pipeline { ... } block, which minimizes “scripting sprawl.” It’s perfect for teams who want a quick path to best practices without diving deeply into Groovy specifics.

- Easier resource allocation: By specifying an agent at either the pipeline level or within each stage, you can offload heavyweight tasks (builds, tests) onto separate worker nodes or Docker containers. This approach helps prevent your main Jenkins controller from becoming overloaded.

- Parallelization and matrix builds: If you need to run multiple test suites or support various OS/browser combinations, Declarative Pipelines make it straightforward to define parallel stages or set up a matrix build. This tactic is incredibly handy for microservices or large test suites requiring different environments in parallel.

- Built-in “escape hatch”: Need advanced Groovy features? Just drop into a script block. This lets you access Scripted Pipeline capabilities for niche cases, while still enjoying Declarative’s streamlined structure most of the time.

- Cleaner parameterization: Want to let users pick which tests to run or which Docker image to use? The parameters directive makes your pipeline more flexible. A single Jenkinsfile can handle multiple scenarios — like unit vs. integration testing — without duplicating stages.

Declarative Pipeline examples

Below are sample pipelines to illustrate how declarative syntax can simplify resource allocation and keep your Jenkins controller healthy.

Example 1: Basic Declarative Pipeline

```groovy
pipeline {
    agent any

    stages {
        stage('Build') {
            steps {
                echo 'Building...'
            }
        }
        stage('Test') {
            steps {
                echo 'Testing...'
            }
        }
    }
}
```

- Runs on any available Jenkins agent (worker).

- Uses two stages in a simple sequence.

Example 2: Stage-level agents for resource isolation

```groovy
pipeline {
    agent none // Avoid using a global agent at the pipeline level

    stages {
        stage('Build') {
            agent { docker 'maven:3.9.3-eclipse-temurin-17' }
            steps {
                sh 'mvn clean package'
            }
        }
        stage('Test') {
            agent { docker 'openjdk:17-jdk' }
            steps {
                sh 'java -jar target/my-app-tests.jar'
            }
        }
    }
}
```

- Each stage runs in its own container, preventing any single node from being overwhelmed.

- agent none at the top ensures no global agent is allocated unnecessarily.

Example 3: Parallelizing test stages

```groovy
pipeline {
    agent none

    stages {
        stage('Test') {
            parallel {
                stage('Unit Tests') {
                    agent { label 'linux-node' }
                    steps {
                        sh './run-unit-tests.sh'
                    }
                }
                stage('Integration Tests') {
                    agent { label 'linux-node' }
                    steps {
                        sh './run-integration-tests.sh'
                    }
                }
            }
        }
    }
}
```

- Splits tests into two parallel stages.

- Each stage can run on a different node or container, speeding up feedback loops.
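The fan-out and wait behavior of the parallel stages above can be modeled outside Jenkins with a thread pool. The Python sketch below is an analogy rather than Jenkins code: the two test functions are hypothetical stand-ins for the shell scripts, and `run_in_parallel` is a helper invented for the example.

```python
from concurrent.futures import ThreadPoolExecutor


def run_unit_tests():
    # Stand-in for ./run-unit-tests.sh
    return "unit: passed"


def run_integration_tests():
    # Stand-in for ./run-integration-tests.sh
    return "integration: passed"


def run_in_parallel(branches):
    """Run each named callable in its own thread and collect the results."""
    with ThreadPoolExecutor(max_workers=max(1, len(branches))) as pool:
        futures = {name: pool.submit(fn) for name, fn in branches.items()}
        # result() re-raises any exception, so one failing branch fails the whole run.
        return {name: future.result() for name, future in futures.items()}


if __name__ == "__main__":
    print(run_in_parallel({
        "Unit Tests": run_unit_tests,
        "Integration Tests": run_integration_tests,
    }))
```

As with the pipeline, the overall step finishes only when every branch has finished, so the total time is roughly that of the slowest branch rather than the sum of all branches.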

Example 4: Parameterized pipeline

```groovy
pipeline {
    agent any

    parameters {
        choice(name: 'TEST_TYPE', choices: ['unit', 'integration', 'all'], description: 'Which test suite to run?')
    }

    stages {
        stage('Build') {
            steps {
                echo 'Building...'
            }
        }
        stage('Test') {
            when {
                expression { return params.TEST_TYPE == 'unit' || params.TEST_TYPE == 'all' }
            }
            steps {
                echo 'Running unit tests...'
            }
        }
        stage('Integration') {
            when {
                expression { return params.TEST_TYPE == 'integration' || params.TEST_TYPE == 'all' }
            }
            steps {
                echo 'Running integration tests...'
            }
        }
    }
}
```

- Lets you choose which tests to run (unit, integration, or both).

- Only executes relevant stages based on the chosen parameter, saving resources.
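The stage selection in this example follows directly from the two `when` conditions. The short Python sketch below mirrors that logic so you can check which stages a given parameter value would run. The `stages_to_run` helper is hypothetical and exists only for this illustration.

```python
def stages_to_run(test_type):
    """Return the stage names that would execute for a TEST_TYPE value."""
    if test_type not in ("unit", "integration", "all"):
        raise ValueError(f"unknown TEST_TYPE: {test_type!r}")
    stages = ["Build"]  # Build has no condition, so it always runs
    if test_type in ("unit", "all"):
        stages.append("Test")
    if test_type in ("integration", "all"):
        stages.append("Integration")
    return stages


if __name__ == "__main__":
    for choice in ("unit", "integration", "all"):
        print(choice, "->", stages_to_run(choice))
```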

Example 5: Matrix builds

```groovy
pipeline {
    agent none

    stages {
        stage('Build and Test Matrix') {
            matrix {
                agent {
                    label "${PLATFORM}-docker"
                }
                axes {
                    axis {
                        name 'PLATFORM'
                        values 'linux', 'windows'
                    }
                    axis {
                        name 'BROWSER'
                        values 'chrome', 'firefox'
                    }
                }
                stages {
                    stage('Build') {
                        steps {
                            echo "Build on ${PLATFORM} with ${BROWSER}"
                        }
                    }
                    stage('Test') {
                        steps {
                            echo "Test on ${PLATFORM} with ${BROWSER}"
                        }
                    }
                }
            }
        }
    }
}
```

- Defines a matrix of PLATFORM x BROWSER, running each combination in parallel.

- Perfect for testing multiple OS/browser combinations without duplicating pipeline logic.
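A matrix is the Cartesian product of its axes, which is why two platforms and two browsers yield four cells. The Python sketch below expands the same axes to show the combinations. The `expand_matrix` helper is an assumption for illustration and says nothing about how Jenkins implements the directive internally.

```python
from itertools import product


def expand_matrix(axes):
    """Return one dict per combination of axis values."""
    names = list(axes)
    return [
        dict(zip(names, combo))
        for combo in product(*(axes[name] for name in names))
    ]


if __name__ == "__main__":
    cells = expand_matrix({
        "PLATFORM": ["linux", "windows"],
        "BROWSER": ["chrome", "firefox"],
    })
    for cell in cells:
        print(cell)
```

Adding a third axis multiplies the number of cells again, so it is worth checking the total before pointing a large matrix at a small pool of agents.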

Using Declarative Pipelines helps ensure your CI/CD setup is easier to maintain, scalable, and secure. By properly configuring agents — whether Docker-based or label-based — you can spread workloads across multiple worker nodes, minimize resource contention, and keep your Jenkins controller humming along happily.

Best practices for CI/CD with Docker and Jenkins

Ready to supercharge your setup? Here are a few tried-and-true habits I’ve cultivated:

- Leverage Docker’s layer caching: Optimize your Dockerfiles so stable (less frequently changing) layers appear early. This drastically reduces build times.

- Run tests in parallel: Jenkins can run multiple containers for different services or microservices, letting you test them side by side. Declarative Pipelines make it easy to define parallel stages, each on its own agent.

- Shift left on security: Integrate security checks early in the pipeline. Tools like Docker Scout let you scan images for vulnerabilities, while Jenkins plugins can enforce compliance policies. Don’t wait until production to discover issues.

- Optimize resource allocation: Properly configure CPU and memory limits for Jenkins and Docker containers to avoid resource hogging. If you’re scaling Jenkins, distribute builds across multiple worker nodes or ephemeral agents for maximum efficiency.

- Configuration management: Store Jenkins jobs, pipeline definitions, and plugin configurations in source control. Tools like Jenkins Configuration as Code simplify versioning and replicating your setup across multiple Docker servers.
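The layer caching advice above follows from how a layered build cache behaves: each layer depends on everything before it, so the first changed step invalidates every later step. The Python sketch below is a simplified model of that rule, not Docker's actual cache. The instruction strings, the embedded checksum notes, and both helper functions are hypothetical.

```python
import hashlib


def layer_keys(instructions):
    """Cache key per layer: a hash of the parent key plus the instruction text."""
    keys, parent = [], ""
    for instruction in instructions:
        parent = hashlib.sha256((parent + "\n" + instruction).encode()).hexdigest()
        keys.append(parent)
    return keys


def reused_layers(old_build, new_build):
    """Count the leading layers whose cache keys still match."""
    count = 0
    for old_key, new_key in zip(layer_keys(old_build), layer_keys(new_build)):
        if old_key != new_key:
            break
        count += 1
    return count


if __name__ == "__main__":
    # Dependencies are installed before the frequently changing source is copied.
    stable_first_v1 = ["FROM base", "COPY deps.txt (checksum aaa)", "RUN install deps", "COPY src (checksum v1)"]
    stable_first_v2 = ["FROM base", "COPY deps.txt (checksum aaa)", "RUN install deps", "COPY src (checksum v2)"]
    # The source is copied first, so every source change precedes the install step.
    source_first_v1 = ["FROM base", "COPY src (checksum v1)", "RUN install deps"]
    source_first_v2 = ["FROM base", "COPY src (checksum v2)", "RUN install deps"]

    print(reused_layers(stable_first_v1, stable_first_v2))  # install step stays cached
    print(reused_layers(source_first_v1, source_first_v2))  # install step is rebuilt
```

In the first ordering a source change rebuilds only the final layer, while in the second it also forces the dependency installation to run again, which is usually the slowest step.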

With these strategies — plus a healthy dose of Declarative Pipelines — you’ll have a lean, high-octane CI/CD pipeline that’s easier to maintain and evolve.

Troubleshooting Docker and Jenkins Pipelines

Even the best systems hit a snag now and then. Here are a few hurdles I’ve seen (and conquered):

- Handling environment variability: Keep Docker and Jenkins versions synced across different nodes. If multiple Jenkins nodes are in play, standardize Docker versions to avoid random build failures.

- Troubleshooting build failures: Use docker logs -f <container-id> to see exactly what happened inside a container. Often, the logs reveal missing dependencies or misconfigured environment variables.

- Networking challenges: If your containers need to talk to each other — especially across multiple hosts — make sure you configure Docker networks or an orchestration platform properly. Read Docker’s networking documentation for details, and check out the Jenkins diagnosing issues guide for more troubleshooting tips.

Conclusion

Pairing Docker and Jenkins offers a nimble, robust approach to CI/CD. Docker locks down consistent environments and lightning-fast rollouts, while Jenkins automates key tasks like building, testing, and pushing your changes to production. When these two are in harmony, you can expect shorter release cycles, fewer integration headaches, and more time to focus on developing awesome features.

A healthy pipeline also means your team can respond quickly to user feedback and confidently roll out updates — two crucial ingredients for any successful software project. And if you’re concerned about security, there are plenty of tools and best practices to keep your applications safe.

Authors: T. C. Okenna, Docker Docs